Highlights from the 5th International HTPD Conference, Part 3

The High-Throughput Process Development (HTPD) Conference Series is the key international forum for the presentation and discussion of topics relevant to high-throughput process development and “smart process development” for biopharmaceuticals.

The first of three articles from the conference presented takeaways from the preconference day of the HTPD Conference, which focused on introducing high-throughput process development. The second article focused on “Application of Modern HTPD”. This third article focuses on “Smart process development” and related analytics, and gives a look into the future.

What is Smart process development and what can be achieved?

HTPD lets users harvest a “sea of data”. But as Professor Jürgen Hubbuch of Karlsruhe Institute of Technology (KIT), Germany, highlighted at the opening of the HTPD conference, there is a natural tendency to use this data mainly to confirm our expectations and thinking, while a large part of the information goes unused and is discarded unseen. Are we, then, smart in how we use our resources? The term smart process development usually refers to the use of in silico models, soft sensors, and modeling and data-analysis approaches such as multivariate data analysis (MVDA), quantitative structure-activity relationship (QSAR) modeling, mechanistic modeling, and artificial intelligence (AI) to direct, focus, or reduce screening efforts.

Boehringer Ingelheim Pharma, Biberach, Germany (BI) and KIT presented (1) a case study on developing a cation exchange chromatography (CIEX) unit operation based on smart process development modeling. They noted that established chromatographic and other unit operations often fail to give similar results for similar targets because of unforeseen challenges such as target denaturation, aggregation, protease susceptibility, glycosylation, specific interactions with salts or detergents, and other target-specific factors. HTPD is an excellent choice for screening such factors, and when coupled with more in-depth sequence and 3D structural knowledge, it can help explain both the reason for a failure and the approaches that may solve it.

In the presented case study, the complex and time-consuming task of model calibration was reduced by using quantitative structure-property relationship (QSPR) models. Primary sequence data of the mAbs was used to create homology models of the targets, which were then correlated with high-throughput and lab-scale experimental results. The approach was validated by its ability to predict entire chromatograms for two mAbs not included in the training set.
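The general idea of a QSPR model can be sketched as a regression that maps structure-derived descriptors to a chromatographic parameter, which is then predicted for a molecule kept out of training. The minimal sketch below assumes two hypothetical descriptors, a hypothetical elution-salt target, and plain ordinary least squares; the presented work derived its inputs from homology models and predicted entire chromatograms, which this sketch does not attempt.

```python
from typing import Sequence


def solve_linear_system(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system; descriptors may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_qspr(descriptors: Sequence[Sequence[float]], targets: Sequence[float]) -> list[float]:
    """Ordinary least squares via the normal equations; returns [intercept, w1, w2, ...]."""
    rows = [[1.0, *d] for d in descriptors]  # prepend an intercept column
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(p)]
    return solve_linear_system(xtx, xty)


def predict(coef: Sequence[float], descriptor: Sequence[float]) -> float:
    return coef[0] + sum(w * d for w, d in zip(coef[1:], descriptor))


if __name__ == "__main__":
    # Hypothetical descriptors per mAb: (positive surface patch score, hydrophobic patch score)
    train_x = [(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0), (5.0, 5.0)]
    # Hypothetical elution salt concentration (mM) for each training mAb
    train_y = [110.0, 135.0, 140.0, 165.0, 175.0]
    coef = fit_qspr(train_x, train_y)
    print("coefficients:", [round(c, 3) for c in coef])
    print("held-out mAb prediction (mM):", round(predict(coef, (2.5, 3.5)), 1))
```

Keeping one molecule out of the fit, as in the usage example, mirrors the held-out validation described above on a much smaller scale.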

In a related presentation, GoSilico, BI, and KIT (2) presented a more rigorous mechanistic approach to analyzing partition coefficient (Kp) data for mAbs and their high molecular weight (HMW) species, and simulated CIEX behavior under linear gradient elution with a 5 g/L target feed. Tobias Hahn of GoSilico showed that it is possible to predict column runs from filter-plate experiments and to understand the process design space before doing more complex lab-scale experiments.
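Partition coefficients from batch (filter-plate) experiments are commonly computed from a mass balance, and for ion exchange their salt dependence is often described with a stoichiometric displacement relationship, log Kp = log A − ν log c_salt, where ν approximates the number of binding charges. The sketch below shows both steps under those textbook assumptions; it is not the specific mechanistic model GoSilico presented, and all numbers are hypothetical.

```python
import math
from typing import Sequence


def partition_coefficient(c0: float, c_eq: float, v_liquid: float, v_resin: float) -> float:
    """Kp = q / c from a batch-uptake mass balance.

    c0, c_eq: protein concentration before and after equilibration (g/L)
    v_liquid, v_resin: liquid and resin volumes in the well (same units)
    """
    if c_eq <= 0:
        raise ValueError("equilibrium concentration must be positive")
    q = (c0 - c_eq) * v_liquid / v_resin  # bound protein per resin volume
    return q / c_eq


def fit_displacement_model(salt: Sequence[float], kp: Sequence[float]) -> tuple[float, float]:
    """Least-squares fit of log10(Kp) = log10(A) - nu * log10(c_salt); returns (nu, log10_A)."""
    xs = [math.log10(s) for s in salt]
    ys = [math.log10(k) for k in kp]
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return -slope, y_mean - slope * x_mean


if __name__ == "__main__":
    # Hypothetical filter-plate data at four salt concentrations (mol/L)
    salt = [0.05, 0.10, 0.20, 0.40]
    kp = [partition_coefficient(c0=2.0, c_eq=ceq, v_liquid=0.2, v_resin=0.02)
          for ceq in (0.0012, 0.018, 0.22, 1.3)]
    nu, log_a = fit_displacement_model(salt, kp)
    print(f"characteristic charge nu ~ {nu:.2f}, log10 A ~ {log_a:.2f}")
```

A fitted ν and A of this kind are the sort of parameters that let a column model predict gradient elution from plate data, which is the link Hahn described.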

Large data sets and scalable data management systems

Michael Chinn et al. of Genentech (3) noted that “as high-throughput chromatography process development technologies reach maturity, computational modeling approaches, such as machine learning and mechanistic modeling, represent the next frontier in downstream process development for biologics.” Highlighting the need for large training data sets, the talk focused on building workflows that rapidly generate HTPD data and feed it into scalable data management systems. Michael noted that current models are fairly accurate and can serve as a basis for Monte Carlo process simulation. The models can draw on existing data from small- and large-scale laboratory studies, significantly reducing the need for additional bench-scale experiments. The goal is to move from modeling to RoboColumn™ verification runs in early-stage process development, and on to bench scale in late-stage process development.
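A Monte Carlo process simulation propagates variability in process parameters through a model to estimate how often an output stays within specification. The sketch below uses a hypothetical quadratic yield model and hypothetical parameter distributions purely to show the mechanics; in practice the model would be a calibrated mechanistic or statistical model.

```python
import random
import statistics


def yield_model(ph: float, load: float) -> float:
    """Hypothetical response surface for step yield (%); stands in for a calibrated model."""
    return 95.0 - 8.0 * (ph - 5.0) ** 2 - 0.002 * (load - 40.0) ** 2


def monte_carlo_yield(n_runs: int, spec_min: float, seed: int = 0) -> tuple[float, float]:
    """Sample parameters around their set points; return (mean yield, fraction meeting spec)."""
    rng = random.Random(seed)  # seeded so results are reproducible
    yields = []
    for _ in range(n_runs):
        ph = rng.gauss(5.0, 0.1)     # assumed pH set point 5.0, SD 0.1
        load = rng.gauss(40.0, 2.0)  # assumed load 40 g/L resin, SD 2
        yields.append(yield_model(ph, load))
    in_spec = sum(y >= spec_min for y in yields) / n_runs
    return statistics.fmean(yields), in_spec


if __name__ == "__main__":
    mean_yield, fraction = monte_carlo_yield(10_000, spec_min=94.5)
    print(f"mean yield {mean_yield:.2f}%, {fraction:.1%} of simulated runs meet the spec")
```

Swapping the normal distributions for ones estimated from historical batch data is the natural next step; the sampling loop itself stays the same.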

Both HTPD and smart process development approaches are most established for mAbs, but they are by no means limited to this area. Brian Murray presented a fully automated platform approach that Sanofi is developing for Fc-containing molecules, including mAbs, bispecific mAbs, and trispecific mAbs (4). “The platform approach relies on predefined experiments for multiple resins and modes of chromatography to accommodate modalities of varying complexity,” and the fully automated experiments can be completed in one week. To achieve these timelines, full automation is required, including:

- standardized experiments defined by molecular properties,

- automated buffer preparation and protein titrations,

- chromatography process development studies, including variable loads at fixed residence times, with automated measurements, analysis, and decision-making for iterative experiments.
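Holding residence time fixed while varying the load comes down to simple arithmetic: the flow rate equals bed volume divided by residence time, and the load volume follows from the target load and the feed concentration. The sketch below shows that calculation for one hypothetical miniature column; the column volume, residence time, loads, and feed titer are illustrative values, not Sanofi's settings.

```python
from dataclasses import dataclass


@dataclass
class LoadPlan:
    load_g_per_l: float    # target load, g protein per L resin
    flow_ml_min: float     # flow rate giving the fixed residence time
    load_volume_ml: float  # feed volume to apply
    load_time_min: float   # time needed to apply it


def plan_load(bed_volume_ml: float, residence_time_min: float,
              load_g_per_l: float, feed_conc_g_per_l: float) -> LoadPlan:
    flow = bed_volume_ml / residence_time_min  # Q = V_bed / tau
    mass_mg = load_g_per_l * bed_volume_ml     # (g/L = mg/mL) x mL = mg
    volume = mass_mg / feed_conc_g_per_l       # mg / (mg/mL) = mL
    return LoadPlan(load_g_per_l, flow, volume, volume / flow)


if __name__ == "__main__":
    # Hypothetical: 0.2 mL miniature column, 2 min residence time, 5 g/L feed
    for load in (20.0, 30.0, 40.0):
        plan = plan_load(0.2, 2.0, load, 5.0)
        print(f"{load:.0f} g/L: {plan.flow_ml_min:.3f} mL/min, "
              f"{plan.load_volume_ml:.2f} mL over {plan.load_time_min:.1f} min")
```

Because the flow rate depends only on bed volume and residence time, higher loads simply take longer to apply, which is what keeps the load series comparable.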

Over three days of continuous experimentation, the approach generates 100 pH and conductivity data points, 1500 concentration measurements, and 200 impurity analyses. These data feed statistical analyses used to define orthogonal process architectures and operating ranges.

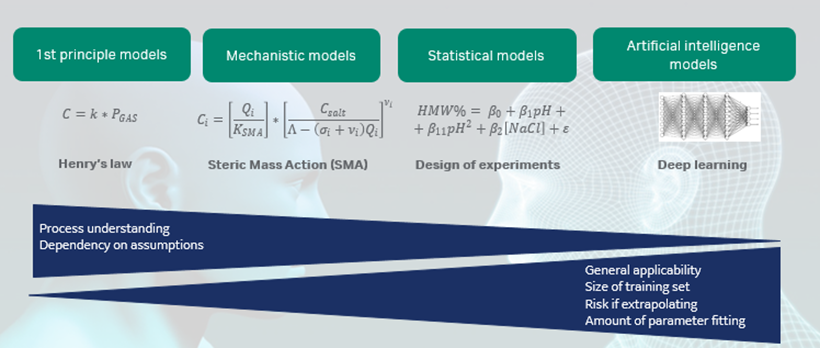

Gunnar Malmquist of Cytiva asked whether mechanistic modeling is the ultimate HTPD tool (5). He stated that mechanistic modeling is one component of the modeling continuum (Fig 1) and that its importance will only grow. However, mechanistic modeling involves a steep learning curve and requires investment in both infrastructure and staff time. Depending on the size of the PD department, either in-house development or outsourcing should be considered. It takes time to build a useful model, but once it exists, accurate results can be generated rapidly.

Dr. Malmquist stressed that good analytical support is crucial for accurate and timely mechanistic modeling. He cited examples where analytical results for charge variants and high molecular weight (HMW) species are treated as if they were independent or orthogonal. In reality, the outcomes of the two chromatographic assays are interrelated: the elution profiles of the individual charge variants overlap with the HMW profile, which complicates mechanistic modeling.

Fig 1. Overview of the modeling landscape. Compared with the statistical models common in our industry today, mechanistic models are built to a greater degree on an interpretation of the underlying physical phenomena and require less data.

Smart PD is not limited to chromatography

Lee Lior-Hoffmann of Genentech (6) discussed the dynamic development of high-throughput screening (HTS) and noted that by the end of 2019 her group would have screened 6500 constructs, including further evaluation of approximately 4000 constructs that showed reasonable expression. The group uses standard robotic workstations together with advanced algorithms developed in-house, including Boomerang for workflow and analysis, coupled to Spotfire analytical dashboards and Python-based nucleic acid analysis. 2D gel images proved particularly challenging, so the group now labels gel photos automatically with axes and standard host cell protein (HCP) peak labels. Automating plate assignment (and its optimization) had a large positive impact on throughput, and a number of presenters highlighted similar simple changes to enhance HTS.
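The plate-assignment step mentioned above can, in its simplest form, map each sample to a plate and a well position. The sketch below assumes a standard 96-well layout (rows A to H, columns 1 to 12) filled row by row, unique sample IDs, and optional reserved control wells; it is a hypothetical illustration and does not represent Genentech's Boomerang workflow.

```python
from itertools import product
from typing import Iterable

ROWS = "ABCDEFGH"
COLS = range(1, 13)


def assign_wells(samples: Iterable[str],
                 reserved: frozenset[str] = frozenset()) -> dict[str, tuple[int, str]]:
    """Map each sample ID to (plate number, well), filling row by row and skipping reserved wells."""
    layout = [f"{r}{c}" for r, c in product(ROWS, COLS) if f"{r}{c}" not in reserved]
    if not layout:
        raise ValueError("all wells are reserved")
    assignment = {}
    for i, sample in enumerate(samples):
        plate, pos = divmod(i, len(layout))  # start a new plate once the usable wells run out
        assignment[sample] = (plate + 1, layout[pos])
    return assignment


if __name__ == "__main__":
    constructs = [f"construct-{i:04d}" for i in range(200)]  # hypothetical IDs
    layout = assign_wells(constructs, reserved=frozenset({"A1", "H12"}))  # e.g. control wells
    print(layout["construct-0000"], layout["construct-0199"])
```

Optimizing the assignment (for example, grouping samples by expected titer) would replace the simple row-by-row order, but the output mapping could keep the same shape.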

On the upstream side, Joseph Newton (7) presented modeling approaches for a fully automated microbial fermentation platform at Boehringer Ingelheim in Vienna. The platform can carry out 100 fed-batch fermentation runs per week at bench scale, up to 5 L. The large amount of data it generates opens up a wide range of opportunities for mechanistic or statistical modeling, enabling smart PD and optimization.

In a recent study, a machine learning model predicted process conditions that increased target titer by 15% compared with more traditional optimization via design of experiments (DoE). The team noted that “The major obstacle for widespread industrial use of model-based technologies is a lack of standardized methodologies for modeling approaches.”
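The traditional DoE baseline in such comparisons is often a two-level factorial design. The sketch below generates a full factorial design in coded units (-1/+1) and estimates each factor's main effect as the difference between the mean responses at the high and low levels. The factor names and the response function are hypothetical.

```python
from itertools import product
from typing import Sequence


def full_factorial(n_factors: int) -> list[tuple[int, ...]]:
    """All 2**n combinations of the coded levels -1 and +1."""
    return list(product((-1, 1), repeat=n_factors))


def main_effects(design: Sequence[Sequence[int]], response: Sequence[float]) -> list[float]:
    """Main effect of factor j = mean(response at +1) - mean(response at -1)."""
    effects = []
    for j in range(len(design[0])):
        high = [y for run, y in zip(design, response) if run[j] == 1]
        low = [y for run, y in zip(design, response) if run[j] == -1]
        effects.append(sum(high) / len(high) - sum(low) / len(low))
    return effects


if __name__ == "__main__":
    factors = ["temperature", "feed_rate", "inducer"]  # hypothetical factor names
    design = full_factorial(len(factors))
    # Hypothetical titer response; a real study would use measured titers per run.
    titer = [10 + 2 * t + 3 * f - 1 * i for t, f, i in design]
    for name, effect in zip(factors, main_effects(design, titer)):
        print(f"{name}: {effect:+.1f}")  # prints +4.0, +6.0 and -2.0
```

A machine learning model trained on the same runs can capture interactions and curvature that this main-effects view ignores, which is one plausible reason for the gap reported above.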

Cornelia Walther and colleagues of BI, Vienna, and the University of Natural Resources and Life Sciences, Vienna, Austria (8) presented challenges in applying integrated process modeling (IPM) and machine learning techniques to inclusion body solubilization and refolding. Over four months, HTPD generated more than 10,000 data points related to solubilization (pH, chaotrope type and concentration, conductivity), refolding, and capture operations.

Using the IPM, the process could be optimized (e.g., with an improved refolding buffer) to run on existing plant equipment, and compared with the existing process it doubled target protein yield. The IPM also allowed further refinement of the refolding buffer so that the target could be loaded directly onto the subsequent capture chromatography column.

This buffer optimization reduced the yield gain to 1.8-fold but improved dynamic binding capacity (DBC) so that a 35% smaller column bed volume could be used. These achievements translated into considerable cost savings.
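The link between DBC and column size is direct: at a fixed load mass, the required bed volume scales inversely with the usable binding capacity. The sketch below assumes the load mass is unchanged and that columns are loaded to a fixed fraction of DBC (0.8 here, an assumed design margin); under those assumptions a 35% smaller bed corresponds to roughly a 1.54-fold higher DBC. The masses and capacities are hypothetical.

```python
def bed_volume_l(load_mass_g: float, dbc_g_per_l: float, utilization: float = 0.8) -> float:
    """Resin volume (L) needed to bind load_mass_g when loading to utilization x DBC."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return load_mass_g / (dbc_g_per_l * utilization)


def dbc_gain_for_volume_reduction(reduction: float) -> float:
    """Fold increase in DBC giving a fractional bed-volume reduction at constant load mass."""
    return 1.0 / (1.0 - reduction)


if __name__ == "__main__":
    mass_g = 500.0     # hypothetical mass of refolded target per batch
    dbc_before = 30.0  # hypothetical DBC, g/L resin
    gain = dbc_gain_for_volume_reduction(0.35)
    v_before = bed_volume_l(mass_g, dbc_before)
    v_after = bed_volume_l(mass_g, dbc_before * gain)
    print(f"DBC gain needed: {gain:.2f}x; bed volume {v_before:.1f} L -> {v_after:.1f} L")
```

If the load mass also changes (for example, because refolding yield changes), both effects multiply, so the DBC gain needed for the same bed-volume reduction shifts accordingly.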

Significance of analytics and novel assay development

A key takeaway from the HTPD conference was the significance of analytics and novel assay development, particularly for new targets and given the very large number of experiments that HTPD generates for analysis. In a presentation on eliminating analytical roadblocks, Robert Dinger of Aachen University (9) explained the significance of developing an HTS method for monitoring biological respiration in single wells of 96-deep-well microtiter plates. Respiration is often monitored in larger vessels using RAMOS™ technology and oxygen partial pressure sensors, because its course over time is a good indicator of culture conditions. The group's optoelectrical sensor, which can monitor individual microtiter plate wells, allowed studies to be run at 0.5 mL instead of 10 mL and delivered a 7-fold increase in throughput, a 15-fold increase in measurement efficiency, and a 20-fold reduction in media consumption per cultivation.
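Respiration monitoring of this kind usually rests on the RAMOS measuring principle: gas flow to the vessel is briefly stopped, and the oxygen transfer rate (OTR) is calculated from the decline of oxygen partial pressure in the closed headspace via the ideal gas law, OTR = −(dp_O2/dt) · V_G / (R · T · V_L). The sketch below applies that relation to a hypothetical series of readings; the gas and liquid volumes and the temperature are assumptions, and this is not a description of the presented sensor's own data processing.

```python
from typing import Sequence

R = 8.314  # J/(mol*K), ideal gas constant


def slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Least-squares slope of ys versus xs."""
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    return sxy / sxx


def otr_mmol_per_l_h(times_s: Sequence[float], p_o2_pa: Sequence[float],
                     v_gas_m3: float, v_liquid_l: float, temp_k: float) -> float:
    """OTR from the pO2 decline in a closed headspace (ideal gas law)."""
    dp_dt = slope(times_s, p_o2_pa)               # Pa/s, negative while O2 is consumed
    mol_per_s = -dp_dt * v_gas_m3 / (R * temp_k)  # Pa*m3 = J, and J / (J/mol) = mol
    return mol_per_s / v_liquid_l * 1000.0 * 3600.0  # mol/(L*s) -> mmol/(L*h)


if __name__ == "__main__":
    # Hypothetical readings during one measuring phase (gas inlet and outlet closed)
    t = [0.0, 150.0, 300.0, 450.0, 600.0]
    p = [21000.0, 20750.0, 20500.0, 20250.0, 20000.0]
    otr = otr_mmol_per_l_h(t, p, v_gas_m3=1.5e-6, v_liquid_l=0.5e-3, temp_k=310.15)
    print(f"OTR ~ {otr:.2f} mmol/(L*h)")
```

Fitting a slope over several readings, rather than using only the first and last points, makes the estimate less sensitive to noise in individual sensor values.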

Chris Daniels of MSD presented on HTS of cell substrates for oncolytic virus production (10) and noted that early identification and development of a suitable assay for viral infectivity was critical to the success of a rapid screening process. Conventional plaque assays are difficult to automate and of limited use for HTS, so MSD developed a high-throughput version of the Spearman-Kärber TCID50 assay.
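The Spearman-Kärber method estimates the 50% tissue culture infectious dose from the fraction of infected wells at each step of a serial dilution: log10 TCID50 = x0 − d/2 + d · Σ(r_i/n_i), where x0 is the log10 reciprocal of the highest dilution at which all wells are positive, d is the log10 dilution step, and the sum runs over that dilution and all higher ones. The sketch below implements this textbook formula; it assumes constant dilution steps and a series that ends in an all-negative dilution, and it says nothing about how MSD automated the plate readout.

```python
from typing import Sequence


def spearman_karber_log10_tcid50(log10_dilutions: Sequence[float],
                                 positives: Sequence[int],
                                 totals: Sequence[int]) -> float:
    """log10 TCID50 per inoculum volume.

    log10_dilutions: log10 of the reciprocal dilution, ascending (1, 2, 3 for 10^-1, 10^-2, 10^-3)
    positives, totals: infected wells and wells tested at each dilution
    """
    steps = [b - a for a, b in zip(log10_dilutions, log10_dilutions[1:])]
    if not steps or any(abs(s - steps[0]) > 1e-9 for s in steps):
        raise ValueError("need at least two dilutions with a constant log10 step")
    d = steps[0]
    proportions = [r / n for r, n in zip(positives, totals)]
    full = [i for i, p in enumerate(proportions) if p == 1.0]
    if not full:
        raise ValueError("no dilution with all wells positive")
    if proportions[-1] != 0.0:
        raise ValueError("highest dilution must have no positive wells")
    start = full[-1]  # highest dilution at which every well is positive
    return log10_dilutions[start] - d / 2 + d * sum(proportions[start:])


def tcid50_per_ml(log10_tcid50: float, inoculum_ml: float) -> float:
    """Convert log10 TCID50 per inoculum volume to TCID50 per mL."""
    return 10 ** log10_tcid50 / inoculum_ml


if __name__ == "__main__":
    # Hypothetical plate: 8 replicate wells per 10-fold dilution, 0.1 mL inoculum per well
    log_titer = spearman_karber_log10_tcid50([1, 2, 3, 4, 5, 6], [8, 8, 8, 8, 4, 0], [8] * 6)
    print(f"log10 TCID50 = {log_titer:.2f}; titer = {tcid50_per_ml(log_titer, 0.1):.2e} TCID50/mL")
```

Because the calculation only needs positive/negative calls per well, it lends itself to automated image- or signal-based well scoring.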

The new assay correlated well with plaque assay controls. Chris noted several advantages of HTPD teams owning their own assays; one is that linking HTPD and assay technologies creates opportunities for future machine learning and automation. Sheng-Ching Wang of MSD outlined efforts in downstream viral vaccine production to develop an in-house screening platform and its application to virus purification by chromatographic capture (11).

HTS studies involving 40 plates and 5 resins can yield 4000 data points in one week. An in-house tool called “Platebuddy” allows the team to organize and handle the large amount of data associated with screening new capture steps. In a recent study, they screened 17 chemistries for a new capture step: one anion exchange chromatography (AIEX) membrane plus several chromatography resins, including nine pseudo-affinity, nine AIEX, one mixed-mode (multimodal, MM), and three CIEX types of varying base matrix, pore size distribution, and particle size.

The frontier of HTPD and smart PD approaches

QbD and smart PD joining forces

The conference concluded by looking at the future and at how smart PD can join forces with QbD to support process understanding and robustness. Peter Hagwall of Cytiva presented on the interplay between chromatography resin variability and process parameters (12), opening the door to smart risk assessment and control strategies as another dimension of smart PD. He summarized recent interactions with the biomanufacturing industry involving resin development, process development, and root cause investigations, and presented data on the impact of resin variability on industry-relevant separations together with new tools for smarter process characterization.

Chromatography modeling without any chromatography column runs

Steven Benner et al. of Merck & Co. discussed whether chromatography can be modeled without doing any chromatography column runs at all (13). They noted that HTPD provides the large data sets required for statistical modeling, while mechanistic modeling uses first-principles models to reduce the required data set size.

“A combination of these approaches represents an ideal case where all the data needed to calibrate a mechanistic model could be generated in a single high-throughput experiment.” They presented an attempt to calibrate a chromatography model for a Protein A step using only pulse tests and high-throughput slurry plate data. Mass transfer limitations and bind-elute kinetic complications were identified, which led the team to use alternative approaches such as shallow-bed chromatography.
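Pulse tests are commonly evaluated by moment analysis: the first moment of the response peak gives the mean residence time, and the second central moment gives the band broadening, from which the plate number N = μ1²/σ² and the height equivalent to a theoretical plate (HETP = L/N) follow. The sketch below computes these from a sampled peak by trapezoidal integration. It is a generic illustration of how pulse data can feed a column model, not the Merck calibration procedure; it ignores extra-column contributions, and the peak is synthetic.

```python
import math
from typing import Sequence


def trapezoid(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Trapezoidal integral of ys over xs."""
    return sum((x1 - x0) * (y0 + y1) / 2 for x0, x1, y0, y1 in zip(xs, xs[1:], ys, ys[1:]))


def peak_moments(t: Sequence[float], c: Sequence[float]) -> tuple[float, float]:
    """Return (first moment mu1, second central moment sigma^2) of a sampled peak."""
    area = trapezoid(t, c)
    mu1 = trapezoid(t, [ti * ci for ti, ci in zip(t, c)]) / area
    var = trapezoid(t, [(ti - mu1) ** 2 * ci for ti, ci in zip(t, c)]) / area
    return mu1, var


def plates_and_hetp(mu1: float, var: float, column_length_cm: float) -> tuple[float, float]:
    """Plate number N = mu1^2 / sigma^2 and HETP = L / N."""
    n = mu1 ** 2 / var
    return n, column_length_cm / n


if __name__ == "__main__":
    # Synthetic Gaussian pulse response: mean 5 min, standard deviation 0.5 min
    t = [i * 0.01 for i in range(1001)]
    c = [math.exp(-((ti - 5.0) ** 2) / (2 * 0.5 ** 2)) for ti in t]
    mu1, var = peak_moments(t, c)
    n, hetp = plates_and_hetp(mu1, var, column_length_cm=10.0)
    print(f"mu1 = {mu1:.3f} min, sigma^2 = {var:.4f} min^2, N = {n:.0f}, HETP = {hetp:.3f} cm")
```

Repeating such pulses with non-binding and binding conditions is one common way to separate porosity and dispersion contributions before fitting binding parameters.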

The result of this work was a hybrid mechanistic/empirical model, calibrated using a variety of techniques, that closely predicted the Protein A elution profile.

Predicting drug-product stability

The power of HTPD to enhance other areas of biopharmaceutical production, such as formulation, was also shown by David Smithson et al. of Genentech, South San Francisco, in a presentation on predicting drug product stability (14). Evaluating new product formulations through real-time (or thermally accelerated) stability studies significantly extends project timelines, and in some cases the results do not predict longer-term degradation, especially at 5 °C. Computational and experimental stability model systems may help address such problems.
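A common way to use accelerated data is an Arrhenius extrapolation: fit ln k against 1/T at elevated temperatures and read off the rate at the storage temperature. The sketch below does this with hypothetical rate constants. It also illustrates the caveat about 5 °C: the extrapolation holds only if the same degradation mechanism, with a constant activation energy, operates at the storage temperature. It is not Genentech's model.

```python
import math
from typing import Sequence

R = 8.314  # J/(mol*K)


def fit_arrhenius(temps_c: Sequence[float], rates: Sequence[float]) -> tuple[float, float]:
    """Fit ln k = ln A - Ea / (R * T); returns (Ea in J/mol, ln A)."""
    xs = [1.0 / (t + 273.15) for t in temps_c]
    ys = [math.log(k) for k in rates]
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
             / sum((x - x_mean) ** 2 for x in xs))
    return -slope * R, y_mean - slope * x_mean


def rate_at(temp_c: float, ea: float, ln_a: float) -> float:
    """Arrhenius rate constant at temp_c (same units as the fitted rates)."""
    return math.exp(ln_a - ea / (R * (temp_c + 273.15)))


if __name__ == "__main__":
    # Hypothetical degradation rate constants (% per month) from accelerated studies
    temps = [25.0, 40.0, 50.0]
    rates = [0.05, 0.40, 1.40]
    ea, ln_a = fit_arrhenius(temps, rates)
    print(f"Ea ~ {ea / 1000:.0f} kJ/mol; predicted rate at 5 C ~ {rate_at(5.0, ea, ln_a):.4f} %/month")
```

Checking whether the accelerated points actually fall on one straight line in ln k versus 1/T is a quick first test of the single-mechanism assumption.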

However, defining the appropriate limits of existing models and methods is still a challenge. Genentech works with physicochemical models to understand formulation-dependent stability effects such as asparagine deamidation or methionine oxidation. But in contrast to some other areas, they do not have the thousands of data inputs needed for QSAR or the millions required for machine learning.

Final impressions and wrapping up

The many talks and posters on smart PD and related modeling and analytical methods indicated that this is one of the hottest areas of HTPD. The question is no longer whether such modeling will be useful, but how to make it more useful by understanding which modeling elements and methods provide the needed information at the required level of detail.

Several speakers noted that practitioners struggle with analytical bottlenecks, big data management, and standardization, both within regional and international organizations and between organizations. Steven Cramer noted that for academics, who cannot readily build up large training sets for new or rare target molecules, a common database listing just some protein descriptors and results could be of interest. Protein sequence data would be helpful but is less needed than common descriptors (size, MW, charge at different pH values, net charge at a given pH, hydrophobicity descriptor values) and any practical chromatographic results.
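Descriptors such as net charge at a given pH can be estimated from sequence alone with the Henderson-Hasselbalch equation, summing the fractional charges of ionizable side chains and termini. The sketch below uses one set of approximate pKa values chosen for illustration (published scales differ, and residues in folded proteins deviate because of their local environment), so the numbers are rough descriptors rather than measured charges.

```python
# Approximate pKa values; an assumption, since published scales differ by up to about one unit.
POSITIVE_PKA = {"N-term": 9.0, "K": 10.5, "R": 12.5, "H": 6.0}
NEGATIVE_PKA = {"C-term": 2.0, "D": 3.9, "E": 4.1, "C": 8.3, "Y": 10.1}


def net_charge(sequence: str, ph: float) -> float:
    """Henderson-Hasselbalch net charge of one polypeptide chain at the given pH.

    Treats every Cys as a free thiol; disulfide-bonded cysteines would need to be excluded.
    """
    seq = sequence.upper()
    counts = {aa: seq.count(aa) for aa in "KRHDECY"}
    counts["N-term"] = counts["C-term"] = 1
    positive = sum(counts[g] / (1 + 10 ** (ph - pka)) for g, pka in POSITIVE_PKA.items())
    negative = sum(counts[g] / (1 + 10 ** (pka - ph)) for g, pka in NEGATIVE_PKA.items())
    return positive - negative


def isoelectric_point(sequence: str, lo: float = 0.0, hi: float = 14.0, tol: float = 1e-4) -> float:
    """Bisection for the pH where net charge crosses zero (assumes a sign change in [lo, hi])."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if net_charge(sequence, mid) > 0:
            lo = mid  # still positive: the zero crossing lies at higher pH
        else:
            hi = mid
    return (lo + hi) / 2


if __name__ == "__main__":
    peptide = "ACDEFGHIKLMNPQRSTVWY"  # hypothetical test sequence
    for ph in (5.0, 7.0, 9.0):
        print(f"net charge at pH {ph}: {net_charge(peptide, ph):+.2f}")
    print(f"estimated pI: {isoelectric_point(peptide):.2f}")
```

Descriptors computed this way are cheap to share across organizations because they need only sequence, not proprietary structural models.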

Smart PD presentations included strategies involving new modalities, novel formulations, connected and continuous processing, new unit operations, PD project acceleration, and support for health authority submissions. They also focused on novel therapeutics such as cell and gene therapies, nucleic acids, and vaccines, all of which pose novel production, analytical, and formulation/delivery challenges. A common strength of HTPD and smart PD approaches is their suitability for a wide range of therapies, from cell-based to protein-based.

The need for improved and standardized analytics, including 2D gel and proteomics data on protein contaminants, came up in various talks and post-presentation discussions. In an open, anonymous electronic poll conducted via mobile phone, attendees indicated that their companies’ pipelines included not only mAbs (71%), bispecific mAbs (55%), mAb fragments (48%), antibody-drug conjugates (ADCs, 29%), and non-mAb-related proteins (54%), but also viruses or viral vectors (51%) and cell therapies (23%). A key analytical challenge is that new targets, such as those based on nucleic acids, exosomes, viruses, or cells, often require potency or viability assays, which are time-consuming.

HTPD will continue to be used to characterize critical material attributes, process attributes, and product attributes, enabling QbD-driven systematic and risk-based process understanding. Advances in target and contaminant characterization, molecular modeling, quantitative structure-activity relationships (QSAR), at-line and online process monitoring, and understanding of transport and surface phenomena in separation processes are merging synergistically to support a new dawn for bioprocessing. Rather than only working to understand the nuances of existing processes, it is becoming possible to use in silico methods to design optimal processes and separation approaches cost-effectively. Smart PD methodologies are also expanding upstream (e.g., cell culture and viral vector development) and further downstream to formulation (e.g., drug product stability).

HTPD and smart process development are still evolving fields, and attendees of the 5th International HTPD Conference were looking forward to the next conference.

At the end of February 2020, Cytiva and GoSilico agreed to co-market ChromX™ mechanistic modeling software for preparative liquid chromatography processes.

This tool simulates and predicts chromatographic performance, enabling a deeper understanding of the outcome of existing and future purification processes. As such, ChromX can reduce the number of experiments required during process development, and provides both time and cost savings.

Sofie Stille, General Manager Resins at Cytiva, noted: “As process development becomes more data and simulation driven, mechanistic modeling is proving to be an important tool. Together with GoSilico, we aim to support our customers achieve efficient and robust process outcomes.” Dr. Thiemo Huuk, CEO and co-founder of GoSilico, said: “Mechanistic models accelerate process development and can leverage benefits along the entire product life cycle. Together, we will empower our customers in the United States to digitalize downstream development even faster and to boost the added value.”

The best poster award went to Felix Wittkopp, Eda Isik, Birgit Weydanz, and Christiane Werrstein of Roche Diagnostics GmbH, Pharma Research and Early Development (pRED), Penzberg, Germany. The poster, titled “Smart process development – Use cases for mechanistic modeling in early-stage process development,” presented two case studies from early-stage PD of a complex new target format. In the first, the authors used mechanistic modeling to calculate and evaluate a salt step elution for a chromatography polishing step involving seven protein impurities. The second showed their approach to determining robust pooling criteria for a chromatographic separation involving several critical process parameters. “Both models showed a good correlation with experimental data and helped the project team in informed decision making.” Dr. Wittkopp et al. used ChromX software from GoSilico GmbH to aid their experimental design, simulation, and workflows.
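Determining pooling criteria from a simulated or measured chromatogram can be framed as choosing the contiguous set of fractions that maximizes product recovery while meeting a purity specification. The brute-force sketch below does this for hypothetical fraction data; it is not the Roche workflow or a ChromX feature, and a robustness assessment would repeat the search across the ranges of the critical process parameters.

```python
from typing import Optional, Sequence


def best_pool(product: Sequence[float], impurity: Sequence[float],
              min_purity: float) -> Optional[tuple[int, int, float]]:
    """Return (first, last, recovery) of the contiguous fraction window with the
    highest product recovery whose pooled purity is at least min_purity, or None."""
    total = sum(product)
    best = None
    for i in range(len(product)):
        p_sum = i_sum = 0.0
        for j in range(i, len(product)):  # extend the window one fraction at a time
            p_sum += product[j]
            i_sum += impurity[j]
            if p_sum == 0:
                continue
            purity = p_sum / (p_sum + i_sum)
            recovery = p_sum / total
            if purity >= min_purity and (best is None or recovery > best[2]):
                best = (i, j, recovery)
    return best


if __name__ == "__main__":
    # Hypothetical fraction masses (mg) for product and summed impurities
    product = [1.0, 5.0, 10.0, 5.0, 1.0]
    impurity = [0.0, 0.0, 0.2, 1.0, 3.0]
    print(best_pool(product, impurity, min_purity=0.95))
```

The exhaustive search is quadratic in the number of fractions, which is negligible for typical fraction counts and keeps the logic easy to audit.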

References

1. From sequence to process - in silico DSP development based on quantitative structure-property relationships. David Saleh*¹,², Michelle Ahlers-Hesse¹, Federico Rischawy¹, Gang Wang¹, Simon Kluters¹, Joey Studts¹, Jürgen Hubbuch². ¹Boehringer Ingelheim Pharma GmbH & Co. KG, Biberach, Germany; ²Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany.

2. Mechanistic identification of CEX elution conditions for an antibody aggregate separation using Kp screening. Tobias Hahn*¹, David Saleh²,³, Nora Geng¹, Simon Kluters², Jürgen Hubbuch³. ¹GoSilico GmbH, Karlsruhe, Germany; ²Boehringer Ingelheim Pharma GmbH & Co. KG, Biberach, Germany; ³Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany.

3. Using High Throughput Screening and Data Management Solutions to Enable Mechanistic Modeling of Chromatography Processes. Michael Chinn*, Jessica Yang, Derek Lee, Bella Del Hierro, Kenny Huynh. Genentech, South San Francisco, CA, USA.

4. Fully Automated Platform Approach to FIH Purification Development: mAbs and Beyond. Brian Murray*¹, Tridevi Dahal-Busfield¹, Arjun Bhadouria¹, Martina Fischer², Kevin Brower¹. ¹Sanofi Genzyme, Framingham, MA, USA; ²Sanofi, Frankfurt am Main, Germany.

5. Mechanistic modeling – the ultimate HTPD tool? Gunnar Malmquist*, Peter Hagwall. Cytiva, Uppsala, Sweden.

6. The Dynamic Development of High Throughput Data and Systems for Ever-Evolving Science. Michal Maciejewski, Idris Mustafa, Edward Kraft, Yvonne Franke, Lee Lior-Hoffmann. Genentech, South San Francisco, USA.

7. Modelling approaches with a fully-automated microbial fermentation platform. Joseph Newton*¹, Annina Sawatzki¹, Vignesh Rajamanickam¹,², Jeannine Gesson¹, Stefan Haider¹, Sandra Abad¹, Daniela Reinisch¹. ¹Boehringer Ingelheim RCV GmbH & Co KG, Vienna, Austria; ²Institute of Chemical Engineering, Technische Universität Wien, Vienna, Austria.

8. Application of integrated process modelling and machine learning techniques in high-throughput process development. Cornelia Walther*¹, Martin Voigtmann¹,², Joseph Newton¹, Ali Abusnina³, Elena Bruna³, Anne-Luise Tscheliessnig¹, Michael Allmer¹, Sandra Abad¹, Cécile Brocard¹, Daniela Reinisch¹, Alexandra Föttinger-Vacha¹, Jochen Gerlach¹, Herman Schuchnigg¹, Georg Klima¹. ¹Boehringer Ingelheim RCV, Vienna, Austria; ²University of Natural Resources and Life Sciences Vienna, Vienna, Austria; ³BI-X, Ingelheim, Germany.

9. High Throughput Online Monitoring of Biological Respiration Activities in Single Wells of 96-Deepwell Microtiterplates. Robert Dinger*¹, Clemens Lattermann², David Flitsch³, Jochen Büchs¹. ¹Aachen University, Aachen, Germany; ²Kuhner Shaker GmbH, Herzogenrath, Germany; ³PyroScience GmbH, Aachen, Germany.

10. High-Throughput Screening of Cell Substrates for an Oncolytic Virus to Accelerate Process Development. Chris Daniels*, Ryan Katz, Murphy Poplyk, Marc Wenger. MSD Research Laboratories, West Point, PA, USA.

11. Automated High throughput Chromatography Screening Platform for Virus Purification. Sheng-Ching Wang*, Spyridon Konstantinidis, Marc D. Wenger. Vaccines Process Development, MRL, MSD, West Point, PA, USA.

12. QbD and smart PD join forces: understanding the interplay between resin variability and process parameters. Peter Hagwall, Gunnar Malmquist. Cytiva, Uppsala, Sweden.

13. Modeling chromatography without doing chromatography: Is it possible? Steven W. Benner, John P. Welsh, Jennifer M. Pollard. Merck & Co., Inc., Kenilworth, NJ, USA.

14. Peering Through the Crystal Ball - Predicting Drug Product Stability at Genentech. David Smithson*, Jonathan Zarzar, Saeed Izadi, Jia Sun, Kate Tschudi, Sam Burns, Cleo Salisbury, Nisana Andersen. Genentech, Inc, South San Francisco, USA.