The safety and efficacy of a biopharmaceutical drug are of the highest priority. Manufacturers therefore have to design, implement, and maintain measures to ensure that the bioprocess consistently delivers a high-quality product and that patients are never at risk. The robustness of the manufacturing process has to be validated before a new biological drug can be brought to market.

Regulatory agencies such as the Food and Drug Administration (FDA) and the European Medicines Agency (EMA) impose strict requirements on process characterization and validation studies and ask for a deep process understanding. Mechanistic process models provide advantages over other methods for meeting these requirements, and they can be applied in all stages of process characterization and validation (PC/PV), from process design space development up to linkage studies.

Process characterization and validation

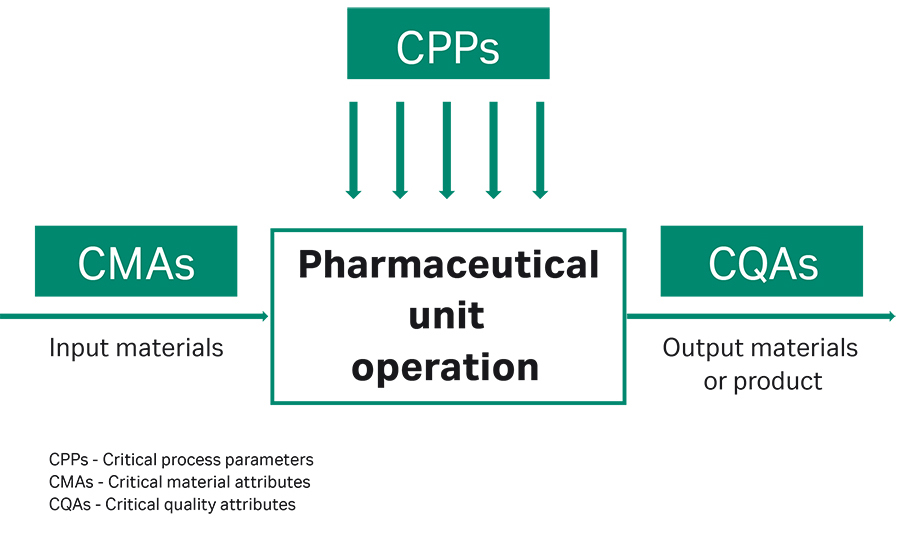

Fig 1. Depiction of the connection between CMAs, CPPs, and CQAs

Process characterization and validation (PC/PV) include all activities for establishing scientific and documentary evidence demonstrating that a production process is compliant at all stages and will consistently lead to a defined quality target product profile (QTPP).

The focus of these activities is to investigate the interplay between critical material attributes (CMAs), critical process parameters (CPPs), and critical quality attributes (CQAs) (Fig 1). Identifying CMAs and CPPs and understanding how they relate to CQAs is not only key to running a future manufacturing process at optimal conditions, but also plays an important role in regulatory process validation for market approval.

PC/PV activities span from the process design stage through routine production and play a key role in bioprocess development. The activities can be described in three stages:

- Process design: Based on knowledge and process understanding gained during development and scale-up activities, the commercial production process is defined.

- Process qualification: The process design is confirmed and reproducible manufacture of the target product at the desired level of quality is ensured.

- Continued process verification: During routine production, the stability and robustness as well as the continuous and controlled running of the process are ensured.

ICH and the concept of quality-by-design (QbD)

Regulatory agencies such as the FDA and EMA set rigorous requirements for market approval and process validation. The International Council for Harmonisation of Technical Requirements for Pharmaceuticals in Human Use (ICH) provides a global platform to discuss and harmonize local requirements for drug registration. Founded in 1990, the ICH has created a forum in which regulators and the pharmaceutical industry discuss scientific and technical aspects and release guidelines for implementing regulatory requirements. One of the key contributions of the ICH is the introduction of Quality-by-Design (QbD) to the pharmaceutical industry.

PC/PV under the QbD paradigm

PC/PV activities under the QbD paradigm include:

- Risk-ranking and filtering (RRF) for the identification of potential critical process parameters (pCPPs) and potential critical material attributes (pCMAs) using platform knowledge and product-specific data. RRF helps to reduce the number of parameters that need to be considered in the following experimental investigation. RRF relies on platform knowledge but can also involve experimental studies such as Design of Experiments (DoE).

- Multivariate DoEs to investigate the effect of pCPPs and pCMAs on CQAs and to define critical process parameters (CPPs) and critical material attributes (CMAs) with appropriate parameter ranges.

- Linkage studies of different unit operations under worst-case conditions.
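The first two activities in the list above can be sketched in a few lines of Python. This is a minimal illustration, not a validated procedure: the parameter names, the impact and uncertainty scores, the multiplicative scoring rule, and the threshold of 30 are all assumptions chosen for demonstration. In practice, scores come from platform knowledge and product-specific data.

```python
from itertools import product

# Hypothetical candidate parameters with illustrative (impact, uncertainty)
# scores on an arbitrary 1-10 scale. These values are NOT real process data.
candidate_scores = {
    "load_density": (8, 4),
    "elution_pH": (9, 5),
    "flow_rate": (3, 2),
    "salt_concentration": (7, 5),
    "column_temperature": (2, 3),
}


def risk_rank_and_filter(scores, threshold):
    """Rank parameters by impact * uncertainty and keep those at or above threshold.

    The multiplicative score is an assumed convention for this sketch.
    """
    ranked = sorted(
        ((name, impact * uncertainty) for name, (impact, uncertainty) in scores.items()),
        key=lambda item: item[1],
        reverse=True,
    )
    return [(name, score) for name, score in ranked if score >= threshold]


def full_factorial(parameter_names):
    """Two-level full factorial design in coded units (-1 = low, +1 = high)."""
    return [
        dict(zip(parameter_names, levels))
        for levels in product((-1, 1), repeat=len(parameter_names))
    ]


retained = risk_rank_and_filter(candidate_scores, threshold=30)
pcpp_names = [name for name, _ in retained]
design = full_factorial(pcpp_names)

print("Potential CPPs:", pcpp_names)
print("Number of DoE runs:", len(design))
```

Filtering five candidates down to three reduces a two-level full factorial design from 32 runs to 8, which is the practical motivation for performing RRF before the multivariate DoE.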

Because experimental studies cannot be performed at full commercial scale, they are run on scale-down models (SDMs). An SDM must therefore be qualified to show that it is representative of the commercial-scale system. SDM qualification is commonly performed by comparing SDM results with full-scale data, which are available only under target conditions. Process performance qualification (PPQ) runs are often used for this purpose. To achieve statistical significance, Phase III manufacturing runs are often included as well.

Risk-ranking and filtering, identification of CPPs, linkage studies, and scale-down model qualification all come with a significant risk of underestimating the criticality of certain parameters. The evidence that can be gathered from simple one-factor-at-a-time experimental studies or from DoEs is limited. Higher-order effects and interactions between multiple CPPs are typically not sufficiently covered.
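A small numerical sketch illustrates why one-factor-at-a-time (OFAT) studies can underestimate criticality when parameters interact. The response function below is purely hypothetical (two coded parameters `x1` and `x2` with an assumed interaction term) and does not represent any real process.

```python
from itertools import product


def purity(x1, x2):
    """Hypothetical purity response (%) in coded units from -1 to +1.

    The coefficients, including the -6.0 interaction term, are assumptions
    chosen only to illustrate the effect of an interaction.
    """
    return 90.0 - 2.0 * x1 - 1.0 * x2 - 6.0 * x1 * x2


# OFAT: vary one parameter at a time while the other stays at its center point.
ofat_runs = [(-1, 0), (1, 0), (0, -1), (0, 1)]
ofat_worst = min(purity(x1, x2) for x1, x2 in ofat_runs)

# Two-level full factorial: all corner combinations of both parameters.
factorial_runs = list(product((-1, 1), repeat=2))
factorial_worst = min(purity(x1, x2) for x1, x2 in factorial_runs)

# Interaction coefficient estimated from the factorial runs via the
# x1*x2 contrast. OFAT runs carry no information about this term,
# because x1*x2 is zero in every OFAT run.
interaction_coeff = sum(
    purity(x1, x2) * x1 * x2 for x1, x2 in factorial_runs
) / len(factorial_runs)

print("Worst purity seen by OFAT:     ", ofat_worst)
print("Worst purity seen by factorial:", factorial_worst)
print("Estimated interaction term:    ", interaction_coeff)
```

In this constructed case the OFAT study never observes a purity below 88 %, whereas the corner where both parameters are high yields 81 %. A two-level factorial captures this two-factor interaction, but curvature and higher-order effects would still require additional levels or a different model.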

One trend for reducing the risk associated with limited experimental evidence in PC/PV is to massively increase the amount of experimental data, e.g., by using high-throughput experimentation (HTE). Unfortunately, using HTE significantly increases the complexity of SDM qualification. Another approach is to use mechanistic process models to reduce the risk associated with PC/PV and SDM qualification.

ICH Q8(R2) suggests mathematical models

The concept of QbD fundamentally relies on process understanding. The ICH Q8(R2) guideline on pharmaceutical development suggests the use of mechanistic models to enhance process understanding. ICH explicitly recommends using a combination of Design of Experiments, mathematical models, or studies that lead to a mechanistic understanding. In 2011, the FDA and EMA continued to present their expectations for a proven process understanding in their Guidance for Industry and highlighted practical implementation strategies based on mathematical process models.

Statistical vs mechanistic downstream models

Mathematical models can be built upon two different paradigms: statistical models and mechanistic models, the latter sometimes also called first-principles models. Statistical approaches extract information from large data sets and build a model based on parameter correlations. They do not need any prior information about, or understanding of, the process being modeled. Design of Experiments and multivariate data analysis (MVDA) are two commonly used techniques for building statistical models in bioprocessing, and recent developments in machine learning, artificial intelligence, and big data also rely on statistical models.

Mechanistic models are derived from fundamental physico-chemical principles and need only a small data set for calibration. In contrast to statistical models, they make explicit how process parameters and material attributes causally affect quality. Only mechanistic models have physically meaningful parameters and support a deep, science-based process understanding. Mechanistic models can be applied in all stages of PC/PV, from RRF and process design space development up to linkage studies.
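The contrast between the two paradigms can be illustrated with a deliberately simple sketch. It assumes that protein binding follows a Langmuir isotherm, q = q_max · K · c / (1 + K · c), generates four synthetic, noise-free data points from assumed parameter values, and then calibrates both a mechanistic model (via the linearized Hanes-Woolf form c/q = c/q_max + 1/(K · q_max)) and a purely statistical straight line. All numbers and names are hypothetical. Real chromatography models are considerably more involved and real data contain noise.

```python
def langmuir(c, q_max, k_eq):
    """Langmuir isotherm: bound amount q as a function of concentration c."""
    return q_max * k_eq * c / (1.0 + k_eq * c)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Assumed "true" parameters used only to generate synthetic calibration data.
TRUE_Q_MAX, TRUE_K_EQ = 60.0, 2.0
concentrations = [0.1, 0.25, 0.5, 1.0]
loadings = [langmuir(c, TRUE_Q_MAX, TRUE_K_EQ) for c in concentrations]

# Mechanistic calibration via the Hanes-Woolf linearization:
# c/q = (1/q_max) * c + 1/(k_eq * q_max)
hw_slope, hw_intercept = fit_line(
    concentrations, [c / q for c, q in zip(concentrations, loadings)]
)
q_max_fit = 1.0 / hw_slope            # saturation capacity
k_eq_fit = hw_slope / hw_intercept    # equilibrium constant

# Statistical alternative: a straight line q = a + b * c through the same data.
b, a = fit_line(concentrations, loadings)

# Extrapolate both models well outside the calibrated concentration range.
c_new = 5.0
mechanistic_prediction = langmuir(c_new, q_max_fit, k_eq_fit)
linear_prediction = a + b * c_new

print(f"Fitted q_max = {q_max_fit:.2f}, K = {k_eq_fit:.2f}")
print(f"Mechanistic prediction at c = {c_new}: {mechanistic_prediction:.1f}")
print(f"Linear prediction at c = {c_new}:      {linear_prediction:.1f}")
```

Under these assumptions, four points suffice to recover both parameters, and each has a physical interpretation (saturation capacity and binding equilibrium). The straight line describes the calibration range reasonably well but, when extrapolated, predicts a loading far above the saturation capacity, which is physically impossible if the Langmuir assumption holds.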
