Digital transformation has become an increasingly important topic for the biopharmaceutical industry as it leads to new opportunities for additional value creation and competitive advantage. Digital biomanufacturing takes advantage of the internet of things to connect disparate sources of data, equipment, materials, and people. In combination with artificial intelligence, augmented reality, robotics, and digital twins, biopharma 4.0 changes legacy concepts from the ground up.

The benefits of biopharma 4.0

Benefits arise along the entire value chain, starting from developing new product candidates and the associated manufacturing processes, up to regulatory aspects, market approval, manufacturing, quality, and much more:

- Digital lab folders and data lakes enable a standardized aggregation of structured and contextualized data through the entire organization. Data hubs are a prerequisite for most pharma 4.0 applications.

- Big data and artificial intelligence are used to screen potential target molecules virtually and play a key role on the path toward personalized medicine.

- Digital twins of production assets enable more efficient operations, flexible and agile process designs, shorter time to market, lower process development costs, and an improved process understanding.

- Virtual process control strategies and soft sensors allow real-time process monitoring and control, as well as release testing of the product.

- Virtual and augmented reality support the design of the manufacturing facilities of the future, while increasing efficiency and reducing downtime in existing plants. Operator training and product change-overs become more efficient.

Digital twins in the biopharmaceutical industry

A digital twin is commonly understood as a virtual representation of a real-world process that allows understanding, optimization, and monitoring of the process. Industries such as aircraft or automotive engineering fundamentally rely on the application of digital twins from concept to design, up to procurement, manufacturing, and service.

The virtual representation is typically accomplished by precise process simulation, based either on statistical approaches such as data analytics and machine learning, or on fundamental natural sciences. Digital twins based on process simulation have revolutionized many industries, including the chemical industry.

Digital twins drive the next generation of bioprocesses

Although other industries seem to be far ahead, the digital twins of bioprocesses have started to affect the biopharmaceutical industry as well. As a game-changing solution, digital twins allow the replacement of laboratory experiments with in silico simulations, enabling a cheap and fast environment for research, development and innovation. Digital bioprocess twins have an enormous potential for value creation.

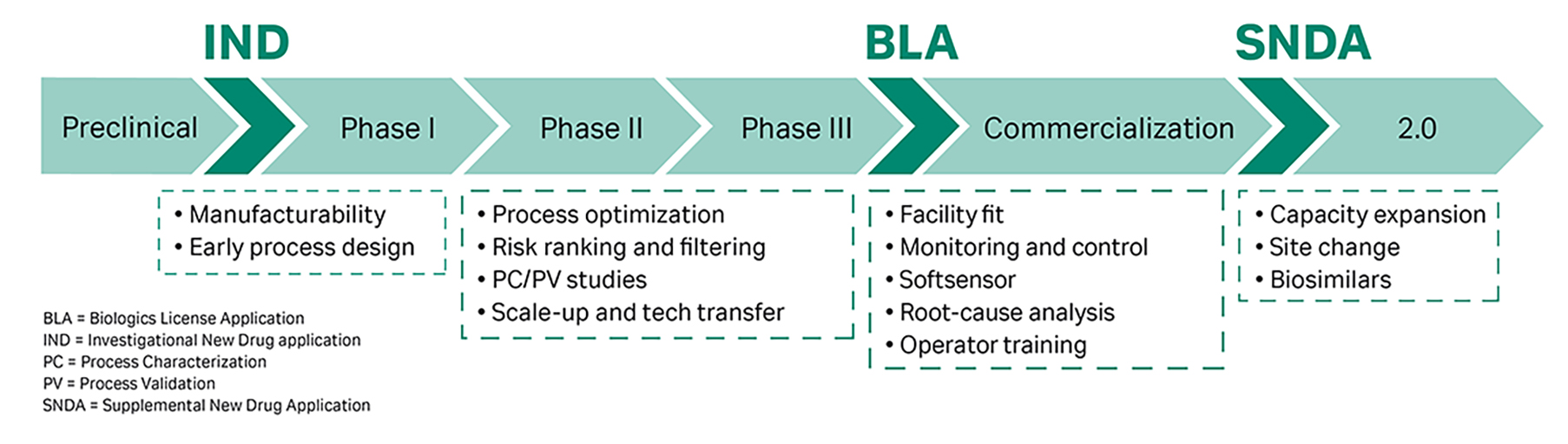

Fig 1. Areas of application for in silico downstream simulation throughout the bioprocessing life cycle.

Digital bioprocess twins: mechanistic vs statistical models

Digital twins of bioprocesses can be accomplished with statistical or mechanistic models. It is essential to consider the differences, since both modeling concepts have their pros and cons. In a nutshell:

- Mechanistic models require a profound and fundamental understanding of the driving mechanisms, denoted in terms of physical and mathematical equations.

- Statistical models are the only feasible method for processes with limited fundamental and quantitative understanding, e.g., living systems like the entire metabolism of a cell.

- Statistical models are typically the method of choice for technical processes with low geometrical complexity such as a bioreactor which can be simplified to a stirred tank reactor.

- Mechanistic models are required to simulate processes with high complexity, such as chromatography or filtration processes. For example, all effects along the chromatography column and within the adsorber pores contribute to the final chromatogram. Statistical models are typically not able to represent this level of complexity. Instead, they oversimplify the digital twin, which may jeopardize project success.

To comply with regulatory requirements on process understanding and Quality-by-Design, the considerations of the International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use (ICH) further suggest utilizing mechanistic models for process characterization and validation (PC/PV).

Digital twins based on mechanistic models turn data into deep process knowledge

Digital downstream twins built from mechanistic models turn sparse process data from development or manufacturing into deep process understanding. This knowledge is expressed in the form of model equations and parameters, allowing the development and management of process knowledge in simple, clear, and precise language.
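To make "model equations and parameters" more concrete, the following is a minimal sketch of what a mechanistic chromatography model can look like. It is not the model used by any particular software product. It assumes a simple lumped kinetic formulation: convective transport through a column split into cells (first-order upwind scheme), a linear driving force for adsorption, and a Langmuir isotherm. All names and parameter values (`simulate_column`, `q_max`, `K`, `k_kin`, `phase_ratio`, and so on) are hypothetical and dimensionless, chosen only for illustration.

```python
def simulate_column(q_max, K=1.0, n_cells=50, length=1.0, u=1.0,
                    phase_ratio=1.0, k_kin=10.0, c_inj=1.0,
                    t_inj=0.2, t_end=10.0, dt=0.005):
    """Simulate a pulse injection on a column; return (times, outlet_conc).

    Illustrative model equations (all parameters are hypothetical):
      dq/dt = k_kin * (q_eq(c) - q)               linear driving force
      q_eq  = q_max * K * c / (1 + K * c)         Langmuir isotherm
      dc/dt = -u * dc/dz - phase_ratio * dq/dt    mobile-phase mass balance
    """
    dz = length / n_cells
    n_inj = round(t_inj / dt)      # number of time steps with feed applied
    n_steps = round(t_end / dt)
    c = [0.0] * n_cells            # mobile-phase concentration per cell
    q = [0.0] * n_cells            # bound concentration per cell
    times, outlet = [], []
    for step in range(n_steps):
        c_in = c_inj if step < n_inj else 0.0
        new_c, new_q = [], []
        for i in range(n_cells):
            upstream = c_in if i == 0 else c[i - 1]
            q_eq = q_max * K * c[i] / (1.0 + K * c[i])
            dq_dt = k_kin * (q_eq - q[i])
            dc_dt = -u * (c[i] - upstream) / dz - phase_ratio * dq_dt
            # Explicit Euler update; dt is chosen small enough for stability
            new_c.append(c[i] + dt * dc_dt)
            new_q.append(q[i] + dt * dq_dt)
        c, q = new_c, new_q
        times.append((step + 1) * dt)
        outlet.append(c[-1])       # simulated chromatogram at the column outlet
    return times, outlet


def apex_time(times, outlet):
    """Return the time at which the outlet concentration peaks."""
    return times[outlet.index(max(outlet))]


if __name__ == "__main__":
    t_tracer, c_tracer = simulate_column(q_max=0.0)  # non-binding tracer
    t_bound, c_bound = simulate_column(q_max=2.0)    # binding component
    print("tracer apex time:", apex_time(t_tracer, c_tracer))
    print("binding component apex time:", apex_time(t_bound, c_bound))
```

In this sketch, the process knowledge lives in a handful of interpretable parameters: changing `q_max` or `K` changes how strongly the component binds, and the simulated chromatogram shifts accordingly. A model for real biomolecules would need considerably more detail, for example pore diffusion and salt- or pH-dependent binding.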

Compared to experimental approaches, mechanistic digital twins yield more and better data and support sound, scientific decision-making along the entire product lifecycle. Associated risks can be mitigated early and at the lowest cost.

Integrated in a development lab or a manufacturing site, digital twins built from mechanistic models constitute soft sensors and thereby facilitate a data-based approach for more effective biopharma process control and root-cause analysis.

Can we use chemical tools for downstream process simulation?

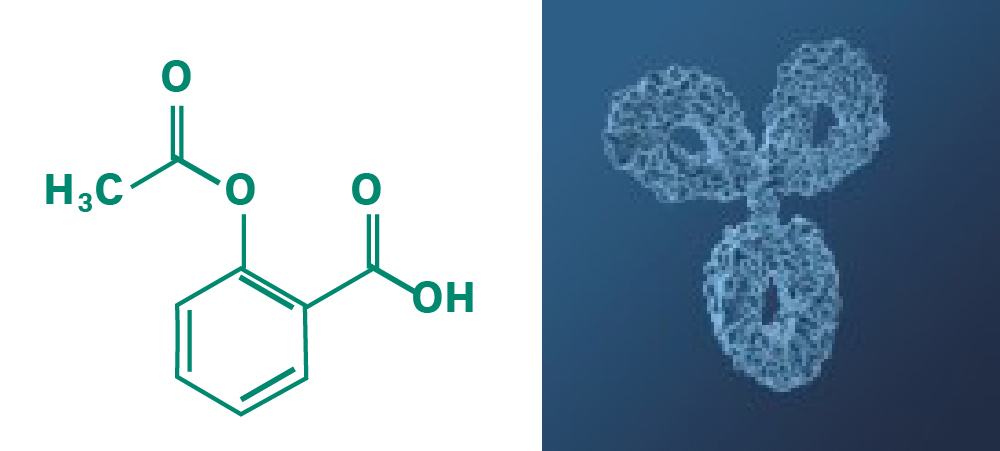

For decades, downstream process simulation tools have played a crucial role in the chemical industry. The core mechanisms in chemical downstream processing may seem like those in biopharmaceutical downstream processing. However, biopharmaceuticals are significantly more complex than small chemical molecules. Therefore, mechanistic simulation tools for chemical processes do not qualify for biopharmaceutical process simulation.

Biopharmaceutical process simulation is a highly interdisciplinary affair. It requires a fundamental understanding of biotechnological processes, substantial knowledge of applied mathematics, solid software programming skills, and extensive industry knowledge. Despite the popularity of process simulation in the chemical industry, biopharmaceutical downstream simulation has remained an academic topic for decades.

Fig 2. Size of pharmaceuticals: A typical chemical molecule (acetylsalicylic acid) is composed of 21 atoms; an antibody is composed of more than 25,000 atoms.

GoSilico™ Chromatography Modeling Software is accelerating biopharma process simulation

GoSilico™ Chromatography Modeling Software overcomes the barrier of complexity and opens the door for digital bioprocess twins for large biomolecules. The software is based on a new generation of physical and biochemical models that accurately describe the behavior of large proteins. State-of-the-art mathematics leads to unsurpassed simulation speed, and model calibration techniques use machine learning to minimize the need for expert knowledge and the risk of human error.

The GoSilico™ Chromatography Modeling Software enables the full utilization of mechanistic models by providing an accurate, fast, and robust simulation framework that is also easy to use.
