Until now, biopharmaceutical process development has relied heavily on experiments, including design of experiments (DoE) and high-throughput process development (HTPD). In silico process simulation based on mechanistic models speeds up process development and provides a more fundamental understanding of how a process behaves. This reduces costs and risks, with value realized throughout all process development stages.

A review of approaches in downstream processing (DSP)

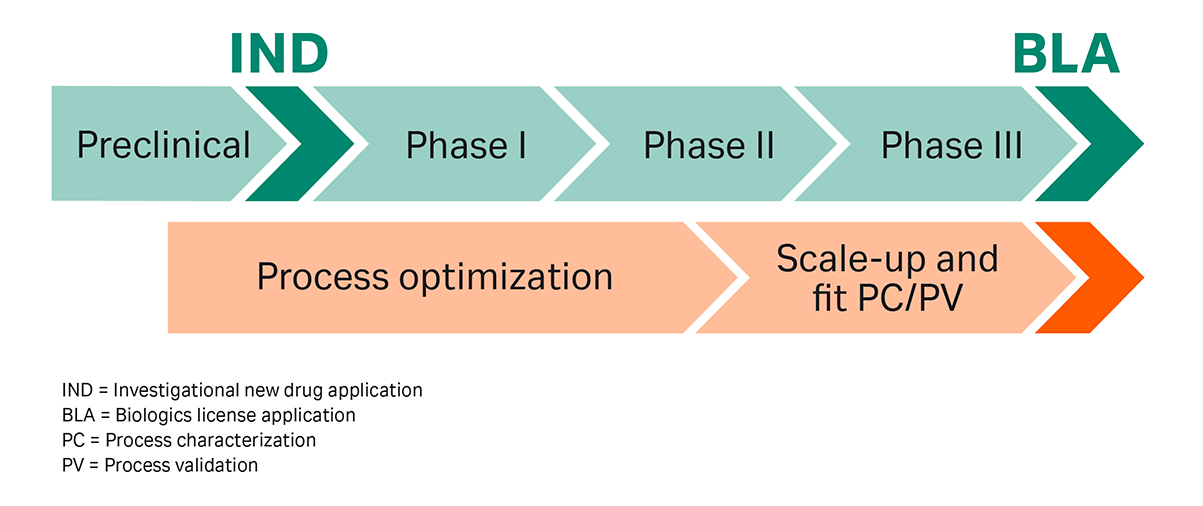

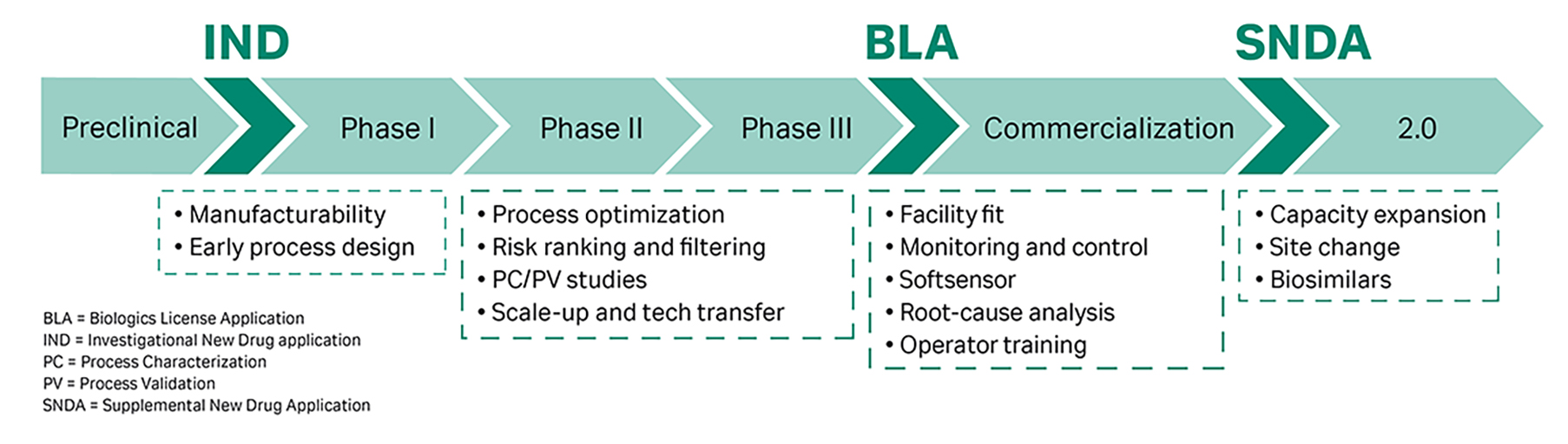

The goal of pharmaceutical development is to design a manufacturing process that consistently ensures the safety and efficacy of the drug. Product quality considerations are therefore central to the development of a downstream purification process throughout the lifecycle of a product, and they can drive high development costs. The different phases of DSP development can be divided and characterized as shown in Figure 1.

Fig 1. The phases of biopharma downstream development.

Early process development phases are driven by the need to increase “speed to clinic” and enable rapid entry into clinical development. Process development activities at that point are kept to a minimum; nevertheless, product quality requirements remain high. This often results in uneconomic processes during Phases I and II. In addition, these processes are generally less robust than commercial production processes.

Due to the high failure rate of products in Phases I and II, time-consuming and expensive development steps are backloaded as far as possible. Once a drug candidate reaches the advanced clinical development phases, chemistry, manufacturing, and controls (CMC) activities are intensified to prepare the candidate for Phase III and commercialization as quickly as possible. Above all, these include process characterization and validation (PC/PV) activities, which involve extensive experimental studies and can become a significant bottleneck in late-stage process development.

It is thus a key decision whether to ‘backload’ in order to save money or to ‘frontload’ in order to gain speed and avoid development bottlenecks for potentially successful candidates. To resolve bottlenecks in DSP development while meeting short timelines and quality requirements, several techniques have evolved that accelerate downstream development and make it more efficient.

Accelerating downstream process development

The experimental effort required for DSP development can be considerable. A wide variety of downstream separation techniques, and combinations of them, can be applied. Moreover, each of these unit operations depends on many process parameters and material attributes, which need to be optimized and validated for robustness. With the limited resources available in DSP development, investigating every parameter combination experimentally quickly becomes impractical.

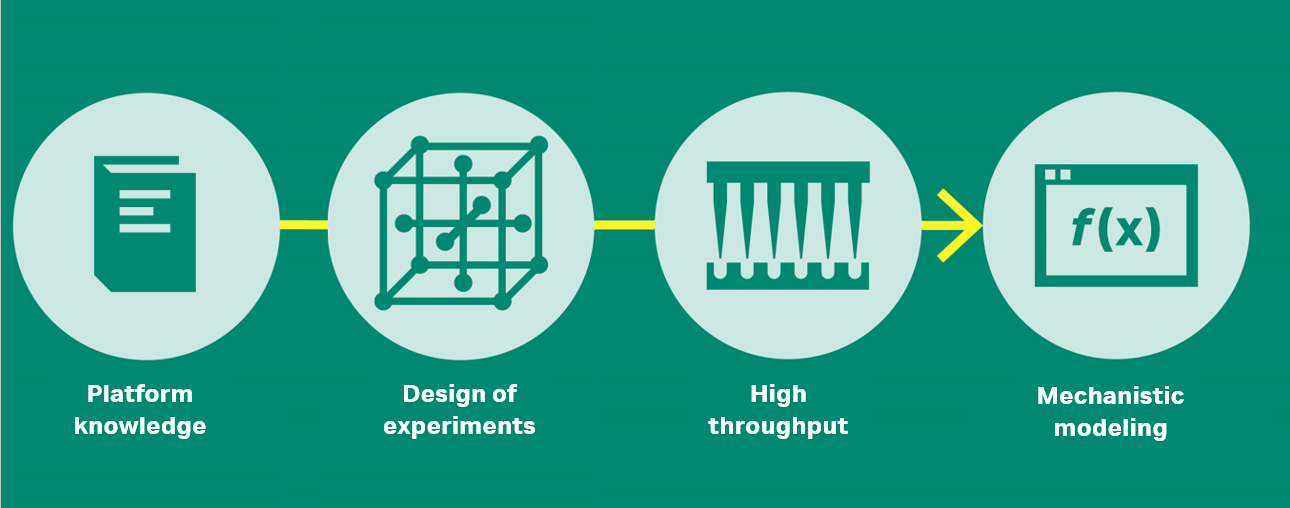

Fig 2. The evolution of biopharma downstream development.

Platform knowledge — do, learn, repeat

Process understanding is developed not only through product-specific experimental studies but also, to a large extent, from platform knowledge generated with previous molecules and processes. Platform knowledge is used to simplify and standardize process development and can even lead to the definition of a platform manufacturing process. The vast number of potential combinations of unit operations is thereby reduced to a generic sequence of unit operations. The optimization of each unit operation is often restricted as well, e.g., to a small number of chromatography adsorbers and buffer candidates that proved effective in past development activities.

Platform knowledge also plays a significant role in late-stage process development: Within a risk-based framework, it can be used to identify non-critical process parameters, allowing resources to be focused on the investigation of critical process parameters (see PC/PV).

Platform knowledge is very efficient, but it has some limitations:

- Platform processes are specific to a certain class of molecules. A diversified pipeline therefore limits how broadly platforms can be applied.

- Process development is always a balance between speed and optimization. Platform processes emphasize speed, which comes with the risk of non-optimal processes.

- Restricting an organization to platform processes risks losing more specific development capabilities.

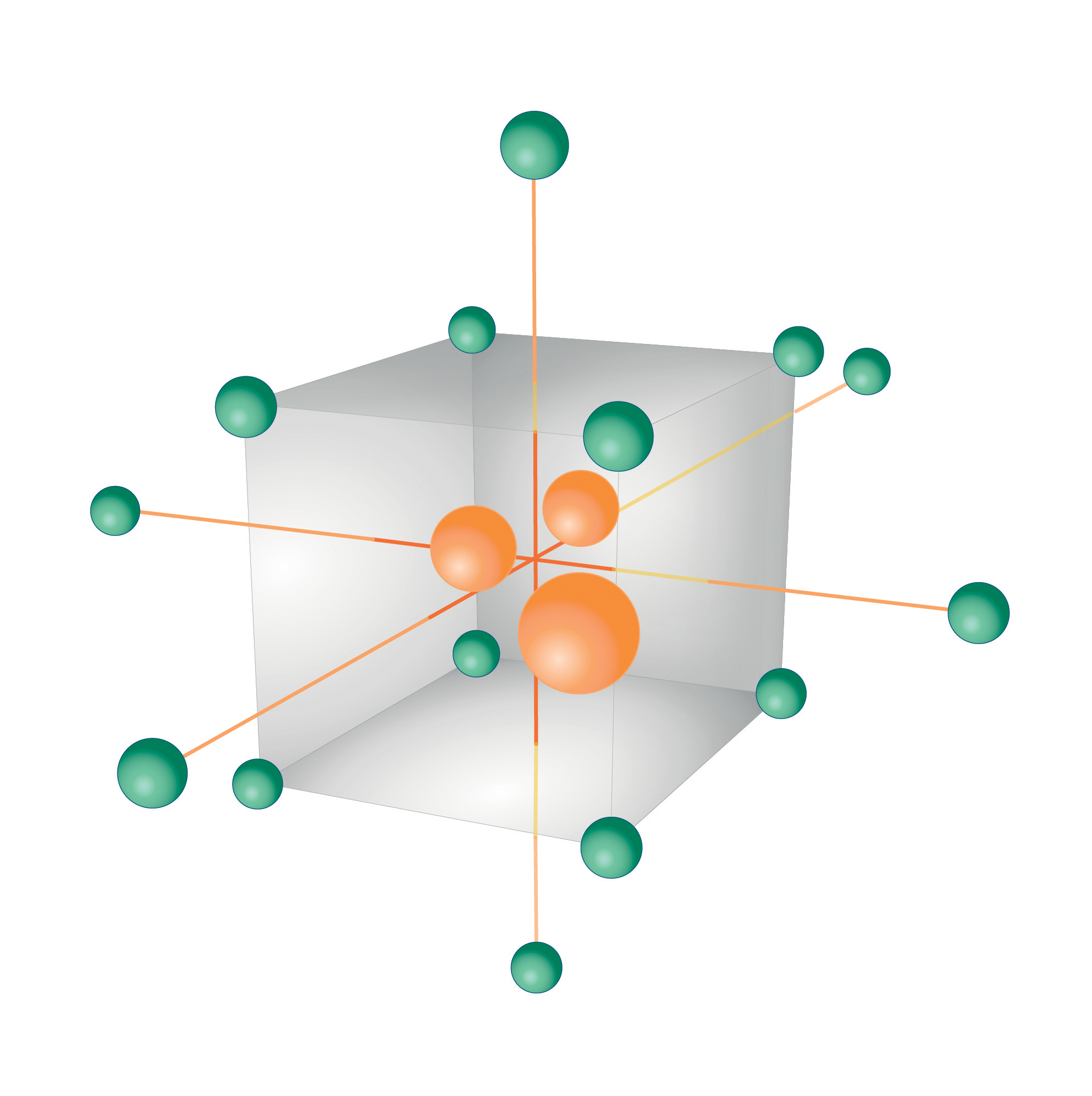

Design of experiments (DoE) — do less, but do it right

Design of experiments (DoE) is a statistical technique that allows practitioners to use their limited resources as efficiently as possible and to extract as much information as possible from experimental data. In process development, DoE usually covers not only the optimal design of experiments, but also the analysis of the gathered experimental data using statistical process models. The underlying mathematics typically rely on linear or quadratic regression models fitted to the experimental data. Thanks to numerous DoE software solutions, the statistical design and evaluation of experiments has become an indispensable tool in process development. It plays a particularly decisive role during PC/PV, where large experimental studies are used to generate process understanding. DoE can help to identify non-critical process parameters with little experimental effort and to focus resources on investigating the effect of critical process parameters on process performance and product quality.
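To make the quadratic statistical model more concrete, the following minimal Python sketch fits a full quadratic response-surface model to a two-factor, three-level full factorial design in coded units (-1, 0, +1). The factor meanings (pH and conductivity), the "true" coefficients used to generate the noise-free synthetic responses, and all function names are illustrative assumptions; in practice, dedicated DoE software or a statistics library would do this work.

```python
from itertools import product


def design_3level(n_factors=2):
    """Three-level full factorial design in coded units (-1, 0, +1)."""
    return [list(run) for run in product((-1.0, 0.0, 1.0), repeat=n_factors)]


def quad_terms(x1, x2):
    """Regressors of a full quadratic model: 1, x1, x2, x1*x2, x1^2, x2^2."""
    return [1.0, x1, x2, x1 * x2, x1 * x1, x2 * x2]


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for j in range(col, n + 1):
                m[r][j] -= factor * m[col][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][j] * x[j] for j in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_quadratic(runs, y):
    """Least-squares coefficients from the normal equations (X^T X) b = X^T y."""
    X = [quad_terms(*run) for run in runs]
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    return solve(xtx, xty)


def predict(b, x1, x2):
    """Evaluate the fitted quadratic model at coded settings (x1, x2)."""
    return sum(bi * ti for bi, ti in zip(b, quad_terms(x1, x2)))


# Hypothetical response (e.g., step yield in %) over coded pH (x1) and
# conductivity (x2), generated without noise from assumed coefficients.
true_b = [80.0, 5.0, -3.0, 2.0, -4.0, -1.0]
runs = design_3level()  # 9 experiments
y = [predict(true_b, x1, x2) for x1, x2 in runs]
b = fit_quadratic(runs, y)
print([round(v, 3) for v in b])  # recovers true_b
```

Because the model is purely empirical, its six coefficients carry no physical meaning, and predictions at coded values beyond ±1 are extrapolations that the design does not support.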

DoE is very powerful, but it has some limitations, such as:

- A statistical model does not provide any physically meaningful model parameters, as it is an empirical fit of simple polynomial terms.

- A large number of experiments is needed to build a statistical model, and this number grows rapidly with the number of parameters investigated.

- The resulting model is only valid within the calibrated parameter range, which restricts its application in other development activities.

Fig 3. Design of experiments.

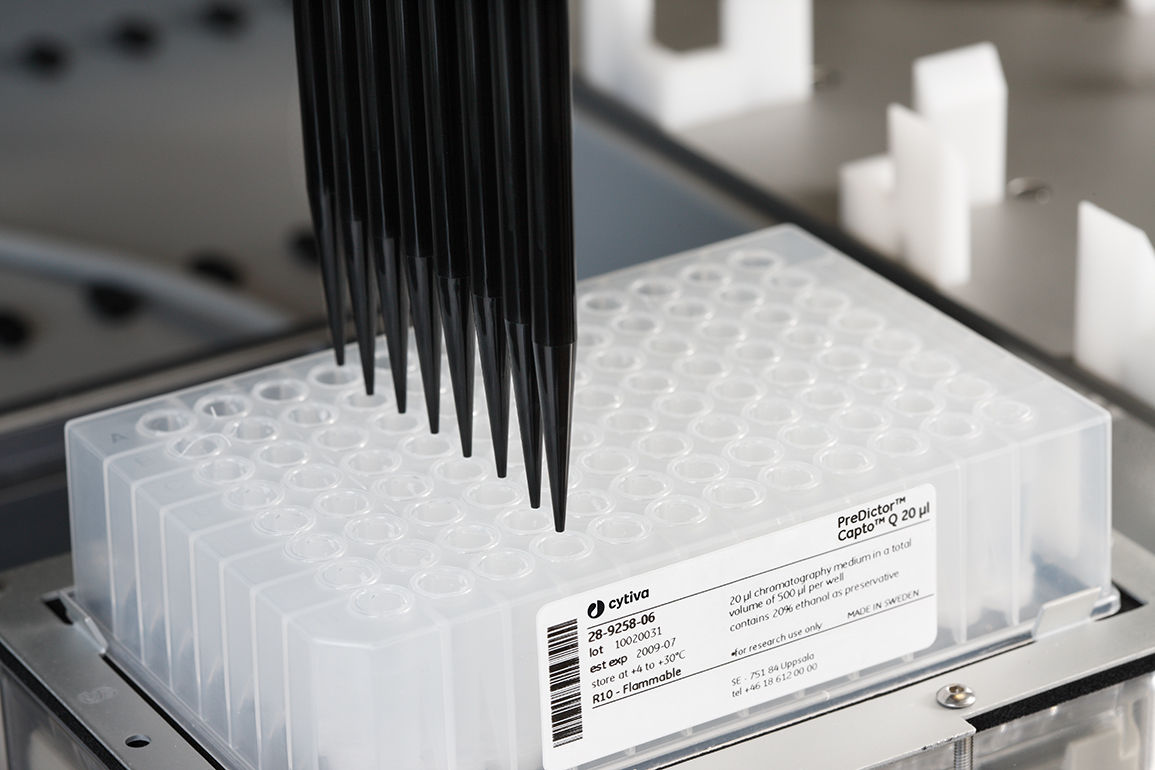

High-throughput process development (HTPD) — do more in parallel

As the experimental effort in DSP development is large, high-throughput experimentation (HTE) approaches have been developed to perform experiments in a miniaturized, parallelized, and automated manner on robotic systems. Because of its high experimental throughput and the low sample volume required per experiment, HTPD has become particularly important for early-phase process development.

Fig 4. A lab robot executing high throughput process development (HTPD).

Several HTE techniques have been developed, covering chromatography and filtration processes. Batch chromatography has been used to screen different chromatography resins and find optimal binding and elution conditions. Miniaturized chromatography columns have gained increasing attention in recent years as a scale-down model for process optimization and PC/PV activities.

Small-scale filtration systems enable an early-stage analysis of product stability with respect to buffer composition and shear stress. Because HTE systems are highly miniaturized, they differ significantly in scale from full-scale commercial systems, which complicates the interpretation of HTE data. For these scale-down models to be qualified, it must be ensured that they are representative of the full-scale system.

HTPD is a great way to generate large amounts of data, but it also has some limitations, such as:

- The data quality in HTPD is typically far lower than that of data derived from traditional benchtop systems.

- The large number of experiments can become a bottleneck if the throughput of the analytical methods cannot keep up.

- A full scale-down model qualification for all process parameters is typically not possible.

In silico process development — all about process knowledge

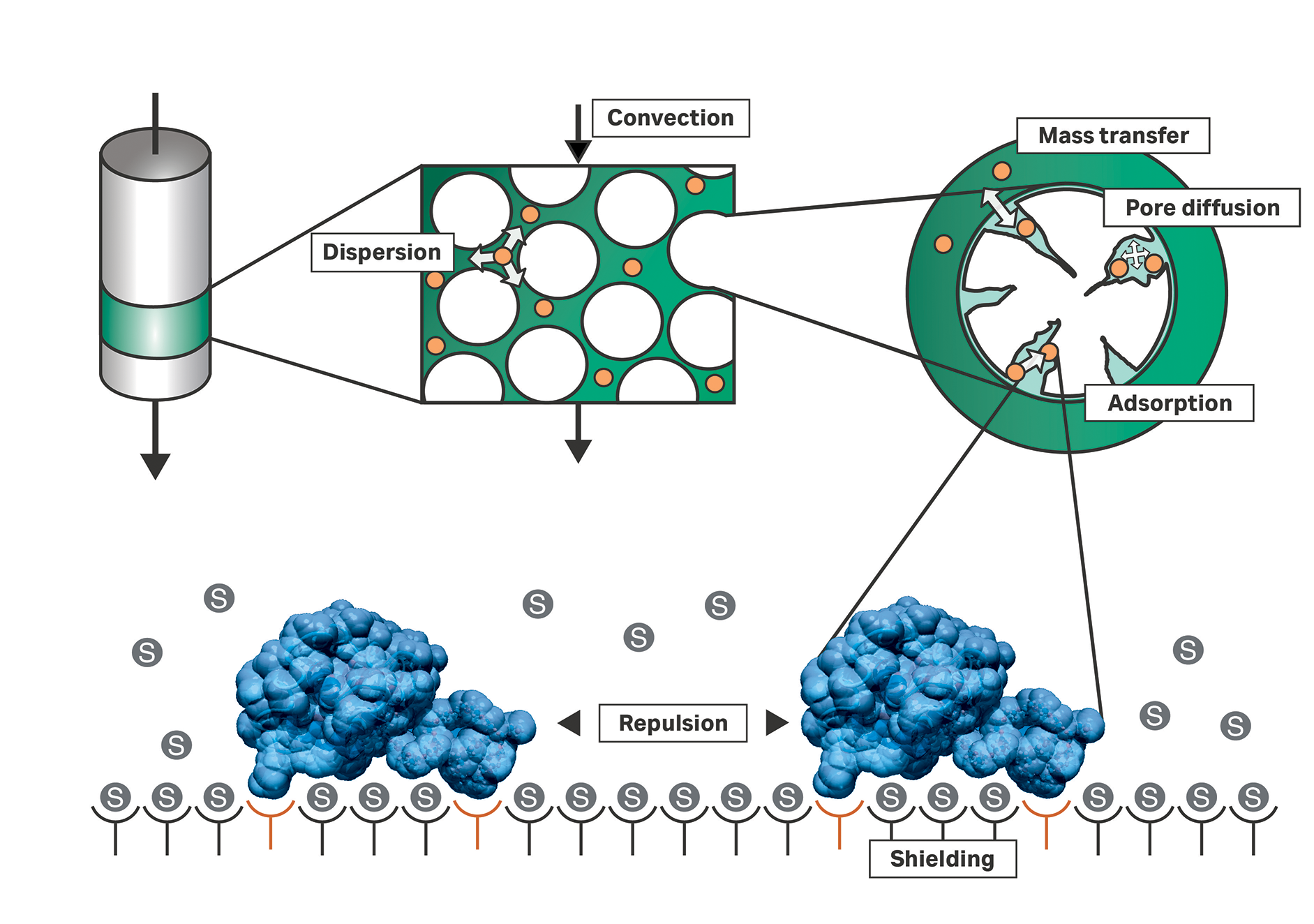

Fig 5. Depiction of the basic principles of a chromatography column.

DoE and HTPD have led to a more efficient and targeted use of available resources in DSP development. However, a large number of experiments is still required to gain profound process understanding and to ensure consistent product quality. In addition, the process knowledge generated by DoE and HTPD is restricted to the experimentally investigated parameters and ranges, which is a severe constraint.

In silico process development based on mechanistic models can provide a more fundamental understanding of the behavior of a process:

- It generates a mechanistic understanding of the process parameters and material attributes affecting process performance and product quality.

- It provides insights into the effect of process parameters and parameter ranges outside the experimental investigations.

- It decreases the number of experiments in process development while increasing the amount of extracted process knowledge.

In this way, in silico process development can be seen as the next evolutionary stage of process development, following DoE and HTPD:

- First, a mechanistic model is built on an experimental data set much smaller than that of a DoE study, with the sole goal of creating a holistic understanding of the process.

- Second, the mechanistic model is used to investigate a number of process scenarios by means of fast, inexpensive computer simulations.
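The two steps can be illustrated with a deliberately minimal Python sketch. It assumes that the "mechanistic model" is just a Langmuir adsorption isotherm, one of the physically based building blocks of chromatography models, calibrated on four hypothetical batch-uptake data points; real column models add mass-transport equations and often more complex isotherms. All parameter values and function names are invented for illustration, and the calibration data are generated from them without noise.

```python
def langmuir(c, q_max, k_eq):
    """Langmuir isotherm: bound concentration q (g/L resin) in equilibrium
    with the liquid-phase concentration c (g/L)."""
    return q_max * k_eq * c / (1.0 + k_eq * c)


def fit_langmuir(c_data, q_data):
    """Calibrate q_max and k_eq by ordinary least squares on the linearised
    (Hanes-Woolf) form  c/q = c/q_max + 1/(k_eq * q_max)."""
    xs = list(c_data)
    ys = [c / q for c, q in zip(c_data, q_data)]
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
             / sum((x - x_mean) ** 2 for x in xs))
    intercept = y_mean - slope * x_mean
    q_max = 1.0 / slope        # slope = 1/q_max
    k_eq = slope / intercept   # intercept = 1/(k_eq * q_max)
    return q_max, k_eq


# Step 1: calibrate on a small, hypothetical data set (four batch-uptake
# experiments, generated here from assumed q_max = 60 g/L, k_eq = 0.8 L/g).
c_cal = [0.25, 0.5, 1.0, 2.0]
q_cal = [langmuir(c, 60.0, 0.8) for c in c_cal]
q_max, k_eq = fit_langmuir(c_cal, q_cal)

# Step 2: run cheap in silico scenarios, including load concentrations
# well outside the calibrated 0.25-2 g/L range.
for c_load in (1.0, 5.0, 10.0):
    print(f"c = {c_load:4.1f} g/L  ->  q = {langmuir(c_load, q_max, k_eq):4.1f} g/L")
```

Because the isotherm has a physical structure, its extrapolated predictions level off towards the fitted binding capacity `q_max` instead of following an arbitrary polynomial trend. With noisy real data, the linearisation distorts the error weighting, so a nonlinear least-squares fit would normally be preferred.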

Mechanistic models bring benefits over the whole product value chain

Fig 6. Areas of application for in silico downstream simulation throughout the bioprocessing life cycle.

Mechanistic models provide significant benefits along the entire life cycle of a biopharmaceutical product (Figure 6). In the early stages of the product life cycle, a simple process model calibrated to a small set of experimental data can be used to design a first downstream process. At these initial stages, the model can reduce the time to clinic. In addition, the initial model already provides deeper process understanding than traditional experimental studies or DoE. This process understanding pays off when starting up the production of clinical material and when handling any unexpected behavior during those clinical batches.

At any stage of the product life cycle, further experimental data can be fed into the mechanistic model to improve its predictive capabilities. The resulting high-quality models can be used to design and optimize the final manufacturing process and to generate holistic process understanding. Mechanistic models thus provide a valuable data source for fact-based risk ranking and filtering (RRF), as well as for subsequent process characterization and process validation (PC/PV) studies. The deep process knowledge they provide is not only relevant for quality by design (QbD)-based filings, but also key for robust and economic operations.

Mechanistic models, e.g., for chromatography or filtration, can predict process scenarios beyond the calibrated data space, including changed process conditions and facilities. Therefore, mechanistic models enable a seamless scale-up of processes or transfer to another manufacturing facility. The risks introduced by changes to the manufacturing strategy, e.g., from gradient elution to step elution or from batch-wise to continuous processing, can be mitigated much more easily.
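How a mechanistic model can evaluate a changed process condition can be sketched in Python. The sketch below assumes a simple tanks-in-series column model with a Langmuir isotherm and linear driving force (LDF) uptake kinetics, integrated with an explicit Euler scheme; the function name and all parameter values (column volume, porosity, rate constant, isotherm parameters, feed concentration) are hypothetical. It compares the load volume at 10 % breakthrough for two flow rates, corresponding to residence times of 5 and 2 minutes.

```python
def breakthrough_volume(flow_ml_min, threshold=0.1, col_vol_ml=1.0, n_cells=20,
                        porosity=0.4, k_ldf=1.0, q_max=20.0, k_eq=1.0,
                        c_feed=1.0, dt=0.01, max_load_ml=15.0):
    """Load volume (mL) at which the outlet c/c_feed first reaches `threshold`.

    Hypothetical model: the column is split into n_cells stirred cells, each with
        porosity * dc/dt = F / V_cell * (c_in - c) - (1 - porosity) * dq/dt
        dq/dt = k_ldf * (q_star(c) - q),  q_star(c) = q_max * k_eq * c / (1 + k_eq * c)
    integrated with explicit Euler steps of size dt (min).
    """
    v_cell = col_vol_ml / n_cells
    c = [0.0] * n_cells  # mobile-phase concentration per cell (g/L)
    q = [0.0] * n_cells  # bound concentration per cell (g/L solid phase)
    t = 0.0
    while flow_ml_min * t < max_load_ml:
        c_in = c_feed  # the first cell sees the feed
        for i in range(n_cells):
            q_star = q_max * k_eq * c[i] / (1.0 + k_eq * c[i])
            dq = k_ldf * (q_star - q[i])
            dc = (flow_ml_min / v_cell * (c_in - c[i]) - (1.0 - porosity) * dq) / porosity
            c_in = c[i]  # pre-update value feeds the next cell (explicit scheme)
            c[i] += dt * dc
            q[i] += dt * dq
        t += dt
        if c[-1] / c_feed >= threshold:
            return flow_ml_min * t
    raise RuntimeError("no breakthrough before max_load_ml was loaded")


# Same hypothetical column at residence times of 5 min (0.2 mL/min)
# and 2 min (0.5 mL/min).
v_slow = breakthrough_volume(0.2)
v_fast = breakthrough_volume(0.5)
print(f"10 % breakthrough: {v_slow:.2f} mL at 0.2 mL/min, {v_fast:.2f} mL at 0.5 mL/min")
```

The faster flow rate leaves less time for mass transfer, so the model predicts breakthrough at a smaller load volume, i.e., a lower dynamic binding capacity. The same model could be rerun for other operating conditions, although a real scale-up or process-change study would need a more detailed transport model with calibrated parameters.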

With a mechanistic process model at hand, plant operators can be trained on the digital twin of a downstream process unit while the construction or validation of a new facility is still in progress. Even rarely occurring manufacturing failures, or scenarios that cannot be reproduced in the real facility, can be simulated and used for training on the computer model. Once regular operations have started, soft sensors powered by a mechanistic model provide direct insights, e.g., into the chromatography column. In case of deviations or unclear process behavior, the mechanistic model can be used as a root-cause investigation tool to identify and understand the chain of causation of the failure and to define preventive actions.

If the manufacturing process needs to be changed after the license has been granted, or if a capacity expansion requires post-approval changes, the knowledge captured in a mechanistic model is key to smooth change management.