By Paul Belcher, Product Strategy Manager, and Martin Teichert, Global Product Manager, Cytiva

The pharmaceutical industry remains under pressure to address the high attrition rates in drug development. Despite this pressure, R&D efficiency in the US, measured as the number of new drugs approved per inflation-adjusted billion dollars spent, has halved roughly every nine years (Eroom’s Law).1 Combined, these factors mean that developers have more samples to test, more information to collect, and less time to make decisions.

There’s a demand for information-rich technologies that can be applied earlier in the drug discovery process to support these changing needs. Some of the existing tools for data analysis have improved — but developers are still faced with manual, time-consuming methods that haven’t scaled with instrument throughput.

That is, until now. The need to increase efficiency and productivity has led scientists to develop a machine learning solution that can optimize the way that developers analyze and characterize their early drug candidates.

Finding the needle in an information-rich haystack

Surface plasmon resonance (SPR) is a real-time and information-rich analysis technique that scientists use throughout drug discovery. SPR technology detects and characterizes the binding of molecular or chemical entities to their targets and provides valuable information on affinity and kinetic parameters. These insights can help guide decision making, enabling the development and advancement of new drugs to the clinic.
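To make “affinity and kinetic parameters” concrete, here is a minimal Python sketch of the simplest interaction model commonly fitted to SPR data, the 1:1 (Langmuir) binding model. It assumes ideal conditions (no mass transport limitation, bulk refractive index shift, or baseline drift), and the function name and parameter values are hypothetical, not taken from Biacore™ software. The association rate constant ka, the dissociation rate constant kd, and the equilibrium dissociation constant KD = kd/ka are the quantities such a fit reports.

```python
import math

def sensorgram_1to1(ka, kd, conc, rmax, t_assoc, t_dissoc):
    """Simulate an ideal 1:1 (Langmuir) SPR sensorgram at 1 s resolution.

    ka: association rate constant, 1/(M*s)
    kd: dissociation rate constant, 1/s
    conc: analyte concentration, M
    rmax: maximum binding capacity of the surface, RU
    t_assoc, t_dissoc: injection and dissociation times, whole seconds
    Returns a list of (time_s, response_RU) tuples.
    """
    KD = kd / ka                       # equilibrium dissociation constant, M
    k_obs = ka * conc + kd             # observed rate constant during injection
    r_eq = rmax * conc / (conc + KD)   # steady-state response at this concentration

    # Association phase: exponential approach to r_eq.
    curve = [(t, r_eq * (1.0 - math.exp(-k_obs * t))) for t in range(t_assoc + 1)]

    # Dissociation phase: buffer flow, exponential decay governed by kd alone.
    r_end = curve[-1][1]
    curve += [(t_assoc + t, r_end * math.exp(-kd * t)) for t in range(1, t_dissoc + 1)]
    return curve

# Hypothetical interaction: ka = 1e5 1/(M*s), kd = 1e-2 1/s, so KD = 100 nM.
curve = sensorgram_1to1(ka=1e5, kd=1e-2, conc=100e-9, rmax=50.0, t_assoc=300, t_dissoc=600)
print(f"response at end of injection: {curve[300][1]:.1f} RU")
```

Injecting at a concentration equal to KD gives a steady-state response of half of Rmax (25 RU here). A kinetic fit runs this logic in reverse, adjusting ka, kd, and Rmax until simulated curves match the measured ones.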

Today, SPR is an essential tool in low-molecular-weight drug discovery because it has the sensitivity required to detect and characterize small, low-affinity compounds binding to their target. SPR doesn’t just identify hits; it quantifies binding, so scientists can rank and characterize compounds based on their affinity, kinetics, and ligand efficiency. This information is critical to supporting ongoing structure-activity relationship (SAR) efforts in hit-to-lead development, helping scientists advance hits into chemistry and lead optimization. Because SPR can meet the growing demand for real-time biophysical data to support decision-making, scientists are applying SPR platforms earlier and earlier in the drug discovery workflow.
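Ligand efficiency (LE) normalizes binding strength by molecular size, which is why it is useful for ranking small hits. A minimal sketch, assuming the standard definition LE = −ΔG / (number of heavy atoms) with ΔG = RT ln KD; the compound names, KD values, and heavy atom counts are invented for illustration.

```python
import math

R_KCAL = 1.987204e-3  # gas constant, kcal/(mol*K)

def ligand_efficiency(kd_molar, heavy_atoms, temp_k=298.15):
    """Binding free energy per heavy (non-hydrogen) atom, kcal/mol.

    Uses dG = RT ln(KD), so LE = -dG / heavy_atoms. At 298 K this is
    about 1.36 * pKD / heavy_atoms.
    """
    delta_g = R_KCAL * temp_k * math.log(kd_molar)  # negative for KD < 1 M
    return -delta_g / heavy_atoms

def rank_by_ligand_efficiency(compounds):
    """compounds: iterable of (name, KD in M, heavy atom count).
    Returns (name, KD, LE) tuples sorted from most to least efficient."""
    scored = [(name, kd, ligand_efficiency(kd, ha)) for name, kd, ha in compounds]
    return sorted(scored, key=lambda row: row[2], reverse=True)

# Hypothetical fragment hits with KD values from SPR affinity fits.
hits = [("frag_A", 200e-6, 12), ("frag_B", 20e-6, 22), ("frag_C", 1e-3, 9)]
for name, kd, le in rank_by_ligand_efficiency(hits):
    print(f"{name}: KD = {kd * 1e6:.0f} uM, LE = {le:.2f} kcal/mol per heavy atom")
```

In this made-up set, the weakest binder (frag_C) ranks first by LE while the tightest binder (frag_B) ranks last, so ranking by KD alone would give the opposite order.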

However, to date, the methods for analyzing information from optical biosensors like SPR haven’t scaled with increasing instrument throughput. Data analysis today is still a manual, multistep process, and developers can still end up spending excessive amounts of time on processing, understanding, and interpreting large amounts of data.

The typical solutions to this growing challenge have been to either work harder or hire more people. But these solutions aren’t scalable, and they can introduce bias and inconsistency. For example, inattentional blindness is the natural human tendency to overlook the unexpected. It can occur when we analyze large datasets, where what we’ve seen in previous data points shapes what we notice in the next ones. This kind of cognitive illusion is difficult to avoid even when we’re aware of it, and it can keep experts from making the serendipitous, accidental findings that have led to major breakthroughs in the pharmaceutical industry throughout history.

The power of expectation can mean we see only what we expect to see, yet breakthroughs often come from noticing the unexpected. As Sir Alexander Fleming put it when discussing his discovery of penicillin, “…I certainly didn’t plan to revolutionize all medicine by discovering the world’s first antibiotic, or bacteria killer. But I suppose that was exactly what I did.”2 Consistency is another concern: Cytiva’s internal research shows that analysis results can vary by 10% to 30% between users, or even for the same user depending on the time or day of analysis.
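The article does not say how that variability was measured. One common way to express analyst-to-analyst spread is the coefficient of variation (CV): the standard deviation of repeated estimates divided by their mean. The sketch below assumes four analysts independently fitted the same dataset; the KD values are hypothetical.

```python
import statistics

def coefficient_of_variation(values):
    """Sample standard deviation as a percentage of the mean."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)

# Hypothetical KD estimates (M) from four analysts fitting the same sensorgrams.
kd_by_analyst = [1.0e-7, 1.2e-7, 0.9e-7, 1.1e-7]
print(f"CV = {coefficient_of_variation(kd_by_analyst):.1f}%")
```

For these made-up numbers the CV is about 12%, meaning a typical analyst’s estimate differs from the group mean by roughly an eighth of its value.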

Even if it were possible to add more people to tackle this data bottleneck and overcome inherent human biases, it would still require significant investments in time and resources to properly train a growing team. Regardless of the biophysical technique utilized, education and onboarding for biophysical data analysis can take weeks, months, or more than a year. So, where do we go from here?

Building trust in AI solutions

Efforts to tackle the issues with data analysis have led to improved software, flatter interfaces, and automated data correction and referencing. Still, even these advances haven’t relieved data bottlenecks enough to significantly improve the efficiency of day-to-day work.
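Referencing is a good example of a correction that is easy to automate. A widely used approach in SPR is double referencing: subtract the signal from a reference surface to remove bulk refractive index effects and nonspecific binding, then subtract a buffer-only (blank) injection to remove remaining systematic differences between the surfaces. A minimal sketch, assuming all curves are already aligned on the same time points; the function name is hypothetical and this is not how Biacore™ software exposes the step.

```python
def double_reference(active, reference, blank_active, blank_reference):
    """Double-referenced response in RU at each time point.

    active / reference: sample injection on the active and reference surfaces
    blank_active / blank_reference: buffer-only injection on the same surfaces
    """
    curves = (active, reference, blank_active, blank_reference)
    if len({len(c) for c in curves}) != 1:
        raise ValueError("all curves must share the same time points")
    return [
        (a - r) - (ba - br)  # reference subtraction, then blank subtraction
        for a, r, ba, br in zip(*curves)
    ]

# Hypothetical responses (RU) at four time points.
corrected = double_reference(
    active=[0.0, 12.0, 20.0, 22.0],
    reference=[0.0, 5.0, 6.0, 6.0],
    blank_active=[0.0, 1.5, 1.5, 1.5],
    blank_reference=[0.0, 1.0, 1.0, 1.0],
)
print(corrected)
```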

Artificial intelligence is a growing field that can take over manual, tedious steps and free up time for developers. But in drug discovery, the use of AI for data analysis has mainly been limited to high-content image analysis. In biophysics specifically, AI applications have been limited to improving peak detection in nuclear magnetic resonance (NMR) and mass spectrometry.

There are several reasons why we haven’t fully adopted AI to analyze large datasets in drug discovery. For example, access to the large amounts of high-quality data needed to train algorithms is limited. There’s also a lack of understanding of, and public hesitation toward, AI technology; Elon Musk, for one, has described AI as far more dangerous than nuclear weapons.3 While AI-based planning algorithms are straightforward and easy to explain, other AI technologies are more complex. Their relationship between input and output may be represented only as a black box, with data going in and results coming out, giving us limited insight into what actually happens in between.

These technologies can help overcome inconsistencies in analysis and save time for developers, but in doing so, they also reduce the information-rich data from SPR to a series of binary responses (yes or no, accept or reject) without any explanation of how each decision was reached. That raises a new question: how do we learn to trust a decision if we have no idea how it was made? In drug discovery, overlooked errors can lead us to advance the wrong or even dangerous compounds, and force us to abandon candidates at later stages.

Transparent machine learning can offer the solution. By allowing us to understand how the output from an algorithm was determined via visualization and annotation, transparent machine learning can build the trust we need in AI solutions.
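A minimal sketch of what “transparent” can mean in practice: instead of returning only accept or reject, each decision carries the rule evaluations that produced it. The feature names (such as the ratio of fitted to theoretical Rmax) and thresholds are hypothetical placeholders, not Cytiva’s features or limits.

```python
from dataclasses import dataclass, field

@dataclass
class Verdict:
    accept: bool
    reasons: list = field(default_factory=list)  # one human-readable line per rule

def assess_fit(features, rules):
    """Accept a fit only if every rule passes; record the evidence for each rule.

    features: dict of feature name -> measured value
    rules: dict of rule name -> (feature name, upper limit)
    """
    verdict = Verdict(accept=True)
    for rule_name, (feature, limit) in rules.items():
        value = features[feature]
        passed = value <= limit
        verdict.accept = verdict.accept and passed
        op, status = ("<=", "pass") if passed else (">", "FAIL")
        verdict.reasons.append(f"{rule_name}: {feature} = {value:g} {op} {limit:g} ({status})")
    return verdict

# Hypothetical rules an expert might apply to a kinetic fit.
RULES = {
    "fit quality": ("chi2_per_rmax", 0.10),  # normalized residual, hypothetical
    "stoichiometry": ("rmax_ratio", 1.2),    # fitted Rmax / theoretical Rmax
    "baseline drift": ("drift_ru", 2.0),
}

result = assess_fit({"chi2_per_rmax": 0.04, "rmax_ratio": 1.8, "drift_ru": 0.5}, RULES)
print("ACCEPT" if result.accept else "REJECT")
for line in result.reasons:
    print("  " + line)
```

The rejection here comes with its cause attached (an Rmax well above the theoretical value, which can point to nonspecific or superstoichiometric binding), so a reviewer can check the reasoning rather than take the call on faith.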

Cytiva’s machine learning prototype

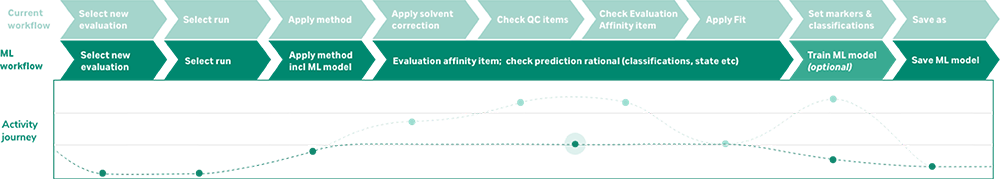

At Cytiva, we are developing a trainable prototype machine learning solution for analyzing and characterizing Biacore™ SPR data. Our solution defines models by a set of features or classifications based on what an expert looks for when evaluating data in different types of applications. Scientists can train and adjust the algorithm to their needs as their investigation changes and progresses. This approach reduces analysis time by eliminating manual steps, while also reducing errors and improving consistency. Our SPR team pretrains the software to help standardize workflows and shorten onboarding for new users.
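The article does not describe the model itself. As a hypothetical stand-in, the sketch below trains the simplest interpretable classifier, a decision stump: it searches for the single feature and threshold whose rule (accept if value <= threshold) best reproduces an expert’s accept/reject labels. Retraining for a new assay or a new expert just means rerunning the search on new labels. Feature names and data are invented for illustration.

```python
def train_stump(examples, labels):
    """Return (feature, threshold, training_accuracy) for the best rule
    'accept if value <= threshold', using observed values as candidate thresholds."""
    best = None
    for feature in examples[0]:
        for threshold in sorted({ex[feature] for ex in examples}):
            correct = sum(
                (ex[feature] <= threshold) == label
                for ex, label in zip(examples, labels)
            )
            accuracy = correct / len(labels)
            if best is None or accuracy > best[2]:
                best = (feature, threshold, accuracy)
    return best

def predict(stump, example):
    """Apply a trained stump and return (decision, explanation)."""
    feature, threshold, _ = stump
    accept = example[feature] <= threshold
    op = "<=" if accept else ">"
    return accept, f"{feature} = {example[feature]:g} {op} {threshold:g}"

# Hypothetical expert-labelled fits (True = expert accepted the fit).
examples = [
    {"chi2_per_rmax": 0.02, "rmax_ratio": 0.9},
    {"chi2_per_rmax": 0.05, "rmax_ratio": 1.0},
    {"chi2_per_rmax": 0.03, "rmax_ratio": 1.6},
    {"chi2_per_rmax": 0.20, "rmax_ratio": 1.1},
    {"chi2_per_rmax": 0.04, "rmax_ratio": 2.0},
]
labels = [True, True, False, False, False]

stump = train_stump(examples, labels)
print(stump)
print(predict(stump, {"chi2_per_rmax": 0.01, "rmax_ratio": 1.3}))
```

A practical system would combine several features (for example in a shallow decision tree) and validate on held-out data rather than training accuracy, but even this one-rule model shows both properties the text describes: it learns from expert labels, and every call traces back to a readable rule.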

To measure and validate the model’s performance, Cytiva deployed its solution at user sites over the course of a year. Our results show up to a 90% reduction in analysis time, saving one collaborator more than eight hours per experiment on assays they run several times a week. By eliminating the time-consuming manual steps of curating and visually assessing data both before and after fitting, our transparent machine learning prototype substantially cut analysis time for collaborating developers across the industry (Figure 1).

Figure 1: Significant reduction in data analysis times with Cytiva machine learning prototype.

Cytiva collaborators also found that this approach can improve consistency when analyzing large datasets. Compared with analyses performed by Biacore™ experts, the prototype’s results agreed with the experts’ findings more than 95% of the time. The time savings can allow developers to support multiple projects simultaneously, as well as run additional biophysical assays to increase confidence in the compounds they want to advance.
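The article does not name the agreement metric behind that figure. A common pair of measures for comparing a model with an expert is raw percent agreement and Cohen’s kappa, which discounts the agreement two raters would reach by chance. Chance matters when one call dominates: if most compounds are rejected, a model that always says “reject” can still score high raw agreement. A sketch with invented accept/reject calls:

```python
def agreement_stats(model_calls, expert_calls):
    """Return (percent agreement, Cohen's kappa) for two lists of binary calls."""
    if len(model_calls) != len(expert_calls) or not model_calls:
        raise ValueError("need two non-empty lists of equal length")
    n = len(model_calls)
    observed = sum(m == e for m, e in zip(model_calls, expert_calls)) / n
    # Chance agreement from each rater's own accept rate.
    p_model = sum(model_calls) / n
    p_expert = sum(expert_calls) / n
    expected = p_model * p_expert + (1 - p_model) * (1 - p_expert)
    # Kappa is undefined when both raters give the same single call every time.
    kappa = (observed - expected) / (1 - expected) if expected < 1 else float("nan")
    return 100.0 * observed, kappa

# Hypothetical accept (True) / reject (False) calls on 20 compounds.
expert = [True] * 10 + [False] * 10
model = [True] * 10 + [True] + [False] * 9   # one disagreement
pct, kappa = agreement_stats(model, expert)
print(f"agreement = {pct:.0f}%, kappa = {kappa:.2f}")
```

With balanced calls, 95% agreement corresponds to a kappa of 0.9. By contrast, a model that rejects everything would also reach 95% agreement against an expert who accepts one compound in twenty, yet its kappa would be 0.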

With only 10% of drugs in Phase I clinical trials likely to reach FDA approval, it’s critical that we design and adopt more efficient methods and technologies to improve workflows in the early phases of drug discovery so that better quality candidates are taken to the clinical phase.4 Advancements in AI, such as Cytiva’s machine learning solution, can provide a smarter and faster way to conclusive results — improving productivity, reducing the time to results, and ultimately helping to advance and accelerate the development of novel therapeutics.

- Nosengo, N. (2016). Can you teach old drugs new tricks? Nature, 534, 314–316.

- Tan, S. Y., & Tatsumura, Y. (2015). Alexander Fleming (1881–1955): Discoverer of penicillin. Singapore Medical Journal, 56(7), 366–367. https://doi.org/10.11622/smedj.2015105

- Clifford, C. (2018, March 13). Elon Musk: ‘Mark my words — A.I. is far more dangerous than nukes’. CNBC. https://www.cnbc.com/2018/03/13/elon-musk-at-sxsw-a-i-is-more-dangerous-than-nuclear-weapons.html

- Takebe, T., Imai, R., & Ono, S. (2018). The current status of drug discovery and development as originated in United States academia: The influence of industrial and academic collaboration on drug discovery and development. Clinical and Translational Science, 11(6), 597–606. https://doi.org/10.1111/cts.12577